Enhancing Spatial Perception and Presence in Immersive Virtual Environments

Enabling accurate spatial perception in VR is critical to ensuring the integrity of immersive virtual environments as a practical tool for architectural design and evaluation. Since our discovery, in 2006, of the key role of cognitive factors in distance perception accuracy in VR, our group has been working on the development of strategies to facilitate veridical spatial understanding by evoking affordances for natural, embodied interaction in HMD-based VR. Our current efforts focus on providing people with a high-fidelity self-embodiment using simple, low-cost technology.

|

Egocentric Distance Judgments in Full-Cue Video-See-Through VR Conditions are No Better than Distance Judgments to Targets in a Void, Koorosh Vaziri, Maria Bondy, Amanda Bui and Victoria Interrante (2021)

Proceedings of the IEEE Virtual Reality Conference, pp. 1-9, doi:10.1109/VR50410.2021.00056.

[article]

[abstract]

|

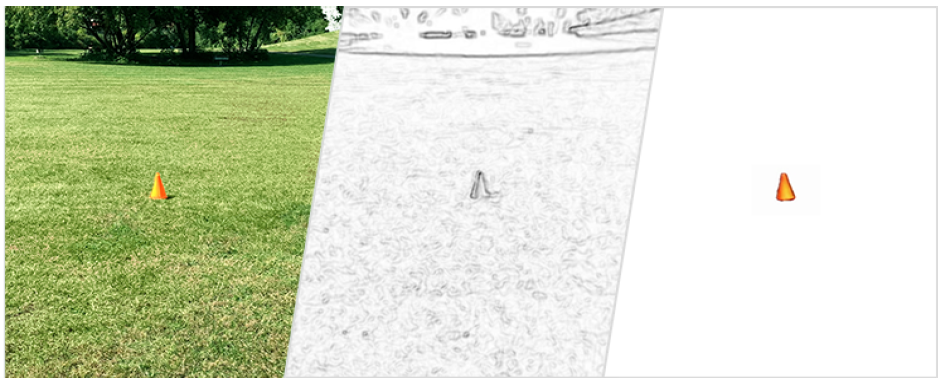

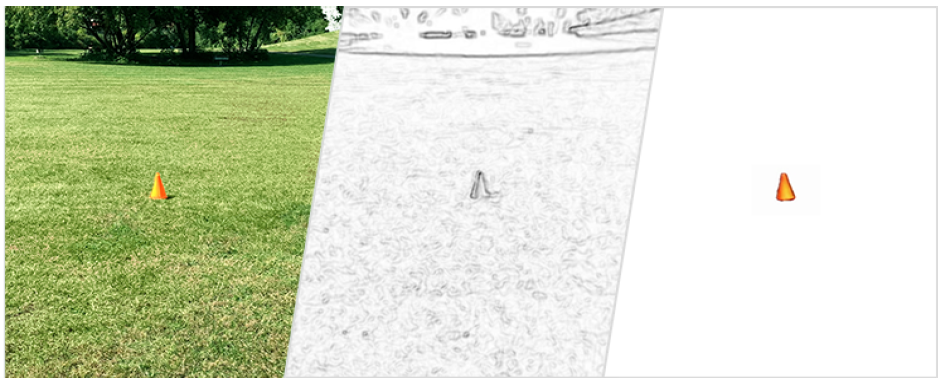

Understanding the extent to which, and conditions under which, scene detail affects spatial perception accuracy can

inform the responsible use of sketch-like rendering styles in applications such as immersive architectural design

walkthroughs using 3D concept drawings. This paper reports the results of an experiment that provides important

new insight into this question using a custom-built, portable video-see-through (VST) conversion of an

optical-see-through head-mounted display (HMD). Participants made egocentric distance judgments by blind

walking to the perceived location of a real physical target in a real-world outdoor environment under

three different conditions of HMD-mediated scene detail reduction: full detail (raw camera view), partial detail

(Sobel-filtered camera view), and no detail (complete background subtraction), and in a control condition

of unmediated real world viewing through the same HMD.

Despite the significant differences in participants' ratings of visual and experiential

realism between the three different video-see-through rendering conditions, we found no

significant difference in the distances walked between these conditions. Consistent with

prior findings, participants underestimated distances to a significantly greater extent

in each of the three VST conditions than in the real world condition. The lack of any

clear penalty to task performance accuracy not only from the removal of scene detail,

but also from the removal of all contextual cues to the target location, suggests that

participants may be relying nearly exclusively on context-independent information such

as angular declination when performing the blind-walking task. This observation

highlights the limitations in using blind walking to the perceived location of a target

on the ground to make inferences about people's understanding of the 3D space of the

virtual environment surrounding the target. For applications like immersive architectural

design, where we seek to verify the equivalence of the 3D spatial understanding derived

from virtual immersion and real world experience, additional measures of spatial

understanding should be considered.
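To make the angular declination cue concrete: for a target resting on level ground, the distance implied by the angle below eye level is the eye height divided by the tangent of that angle. The following is a minimal sketch of that relationship only; the function name and the example values are illustrative assumptions and are not taken from the paper.

```python
import math


def distance_from_declination(eye_height_m: float, declination_deg: float) -> float:
    """Ground distance to a target seen at a given angle below eye level.

    Assumes a flat ground plane and a target resting on it (an idealization).
    """
    if not 0.0 < declination_deg < 90.0:
        raise ValueError("declination must be strictly between 0 and 90 degrees")
    return eye_height_m / math.tan(math.radians(declination_deg))


# Hypothetical example values: 1.6 m eye height, target placed 5 m away.
angle_deg = math.degrees(math.atan2(1.6, 5.0))
implied_distance_m = distance_from_declination(1.6, angle_deg)
```

Note that this computation uses only eye height and the declination angle, with no reference to the surrounding scene, which is what makes the cue context-independent.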

|

The Influence of Avatar Representation on Interpersonal Communication in Virtual Social Environments, Sahar Aseeri and Victoria Interrante (2021)

IEEE Transactions on Visualization and Computer Graphics, 27(5):2608-2617.

[article]

[abstract]

|

Current avatar representations used in immersive VR applications lack features that may be important for

supporting natural behaviors and effective communication among individuals. This study investigates the

impact of the visual and nonverbal cues afforded by three different types of avatar representations in the

context of several cooperative tasks. The avatar types we compared are No Avatar (HMD and controllers only),

Scanned Avatar (wearing an HMD), and Real Avatar (video-see-through). The subjective and objective measures

we used to assess the quality of interpersonal communication include surveys of social presence,

interpersonal trust, communication satisfaction, and attention to behavioral cues, plus two behavioral

measures: duration of mutual gaze and number of unique words spoken. We found that participants reported

higher levels of trustworthiness in the Real Avatar condition compared to the Scanned Avatar and No Avatar

conditions. They also reported a greater level of attentional focus on facial expressions compared to the

No Avatar condition and spent more extended time, for some tasks, attempting to engage in mutual gaze

behavior compared to the Scanned Avatar and No Avatar conditions. In both the Real Avatar and Scanned

Avatar conditions, participants reported higher levels of co-presence compared with the No Avatar condition.

In the Scanned Avatar condition, compared with the Real Avatar and No Avatar conditions, participants

reported higher levels of attention to body posture. Overall, our exit survey revealed that a majority

of participants (66.67%) reported a preference for the Real Avatar, compared with 25.00% for the

Scanned Avatar and 8.33% for the No Avatar. These findings provide novel insight into how a user's

experience in a social VR scenario is affected by the type of avatar representation provided.

|

Am I Floating or Not?: Fidelity of Eye Height Perception in HMD-based Immersive Virtual Environments, Zhihang Deng and Victoria Interrante (2019)

ACM Symposium on Applied Perception, article 14.

[article]

[abstract]

|

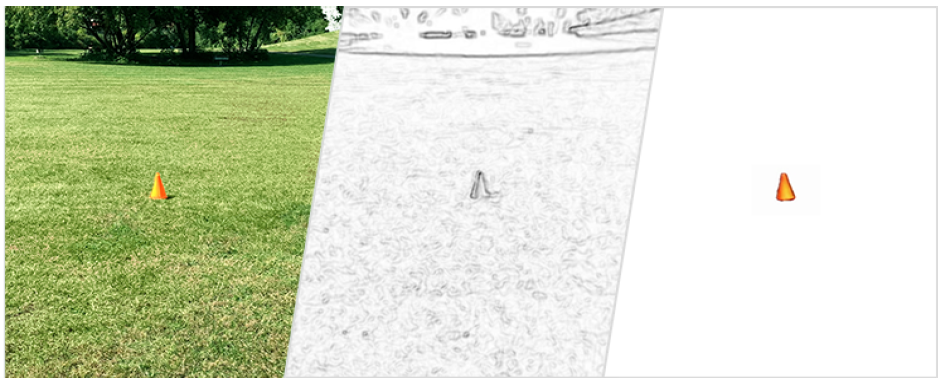

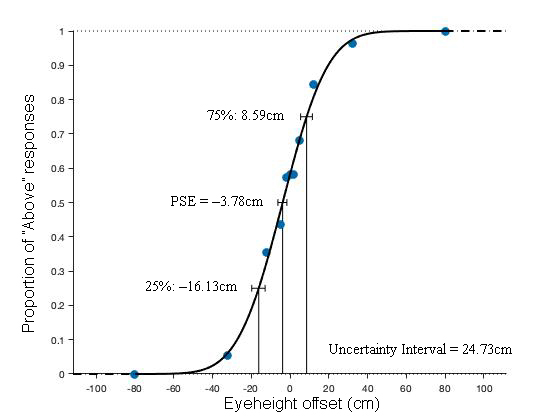

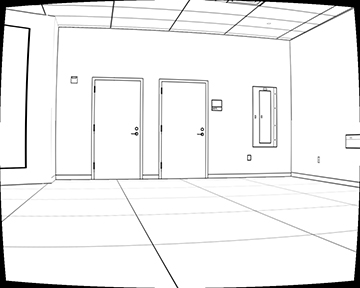

Previous work has found that eye height manipulations can affect people's

judgments of object size in real and virtual environments, as well as their

judgments of egocentric distances in HMD-based immersive virtual environments

(VR). In this short paper, we explore people's sensitivity to eye height

manipulations using a forced-choice task in a wide variety of different

virtual architectural models to seek a better understanding of the range of

eye height manipulations that can be surreptitiously employed under different

environmental conditions.

We exposed each of 10 standing participants to a total of 121 randomly-ordered

trials, spanning 11 different eye height offsets ranging from -80cm to +80cm,

in 11 different highly detailed virtual indoor environments, and asked them to

report whether they felt that their (invisible) feet were floating above or

sunken below the virtual floor. We fit psychometric functions to both the

pooled data and to the data from each virtual environment separately, as well

as to the responses from each participant individually. In the pooled data,

we found a point-of-subjective-equality (PSE) very close to zero

(-3.8cm), and 25% and 75% detection thresholds of -16.1cm and +8.6cm

respectively, for an uncertainty interval of 24.7cm. We also observed

some interesting variations in the PSEs and uncertainty intervals across

the individual rooms, which we discuss in more detail in the paper. We hope

that our results can help to inform VR developers about users' sensitivity to

incorrect eye height placement, to elucidate the potential impact of various

features of interior spaces on people's tolerance of eye height manipulations,

and to inform future work seeking to employ eye height manipulations to address

problems of distance underestimation in VR.
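As an illustration of how a PSE and detection thresholds are read off a fitted psychometric function, the sketch below assumes a logistic curve. The abstract does not state which functional form was fitted, so the logistic form, the sign convention, and the scale parameter here are assumptions chosen only to be consistent with the reported pooled values.

```python
import math


def logistic_psychometric(x_cm: float, pse_cm: float, scale_cm: float) -> float:
    """Probability of one of the two forced-choice responses at offset x_cm.

    Assumed logistic form; which response rises with offset is a convention.
    """
    return 1.0 / (1.0 + math.exp(-(x_cm - pse_cm) / scale_cm))


def offset_at(p: float, pse_cm: float, scale_cm: float) -> float:
    """Offset at which the curve reaches response probability p (logistic inverse)."""
    return pse_cm + scale_cm * math.log(p / (1.0 - p))


# Pooled-data values reported in the abstract.
t25_cm, t75_cm = -16.1, 8.6
uncertainty_interval_cm = t75_cm - t25_cm  # 8.6 - (-16.1) = 24.7 cm

# Hypothetical logistic parameters consistent with those reported values.
pse_cm = -3.8
scale_cm = uncertainty_interval_cm / (2.0 * math.log(3.0))
model_t25_cm = offset_at(0.25, pse_cm, scale_cm)
model_t75_cm = offset_at(0.75, pse_cm, scale_cm)
```

Under this assumed form, the PSE is the offset where the curve crosses 50%, and the uncertainty interval is the distance between the 25% and 75% points.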

|

Investigating the Influence of Virtual Human Entourage Elements on Distance Judgments in Virtual Architectural Interiors, Sahar Aseeri, Karla Paraiso

and Victoria Interrante (2019) Frontiers in Robotics and AI: Virtual Environments: Best Papers from EuroVR 2017,

volume 66.

[article]

[abstract]

|

Architectural design drawings commonly include entourage elements: accessory

objects, such as people, plants, furniture, etc., that can help to provide a

sense of the scale of the depicted structure and "bring the drawings to life"

by illustrating typical usage scenarios. In this paper, we describe two

experiments that explore the extent to which adding a photo-realistic,

three-dimensional model of a familiar person as an entourage element in a

virtual architectural model might help to address the classical problem of

distance underestimation in these environments. In our first experiment, we

found no significant differences in participants' distance perception accuracy

in a semi-realistic virtual hallway model in the presence of a static or

animated figure of a familiar virtual human, compared to their perception of

distances in a hallway model in which no virtual human appeared. In our second

experiment, we found no significant differences in distance estimation accuracy

in a virtual environment in the presence of a moderately larger-than-life or

smaller-than-life virtual human entourage model than when a right-sized virtual

human model was used. The results of these two experiments suggest that virtual

human entourage has limited potential to influence people's sense of the scale

of an indoor space, and that simply adding entourage, even including an

exact-scale model of a familiar person, will not, on its own, directly evoke

more accurate egocentric distance judgments in VR.

|

A Virtual Reality Investigation of the Impact of Wallpaper Pattern Size on Qualitative Spaciousness Judgments and Action-based Measures of Room Size Perception, Governess Simpson, Ariadne Sinnis-Bourozikas, Megan Zhao, Sahar Aseeri

and Victoria Interrante (2018) EuroVR 2018: Virtual Reality and Augmented Reality,

Springer LNCS, volume 11162, pp. 161-176.

[PDF]

[abstract]

|

Visual design elements influence the spaciousness of a room. Although wallpaper

and stencil patterns are widely used in interior design, there is a lack of

research on how these surface treatments affect people's perception of the

space. We examined whether the dominant scale of a wallpaper pattern (i)

impacts subjective spaciousness judgments, or (ii) alters action-based measures

of a room's size. We found that both were true: participants reported lower

subjective ratings of spaciousness in rooms covered with bolder (larger scale)

texture patterns, and they also judged these rooms to be smaller than

equivalently-sized rooms covered with finer-scaled patterns in action-based

estimates of their egocentric distance from the opposing wall of the room. This

research reinforces the utility of VR as a supporting technology for

architecture and design, as the information we gathered from these experiments

can help designers and consumers make better informed decisions about interior

surface treatments.

|

Can Virtual Human Entourage Elements Facilitate Accurate Distance Judgments in VR?, Karla Paraiso and Victoria Interrante (2017) EuroVR 2017: Virtual Reality and Augmented Reality, Springer LNCS, volume 10700, pp. 119-133.

[PDF]

[abstract]

|

Entourage elements are widely used in architectural renderings to provide a

sense of scale and bring the drawings to life. We explore the potential of

using a photorealistic, three-dimensional, exact-scale model of a known person

as an entourage element to ameliorate the classical problem of distance

underestimation in immersive virtual environments, for the purposes of enhancing

spatial perception accuracy during architectural design reviews.

|

Dwarf or Giant: The Influence of Interpupillary Distance and Eye Height on Size Perception in Virtual Environments, Jangyoon Kim and Victoria Interrante (2017) ICAT-EGVE International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments, pp. 153-160. [Best Paper]

[PDF]

[abstract]

|

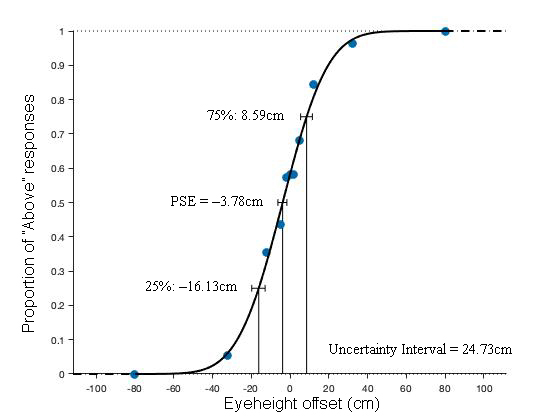

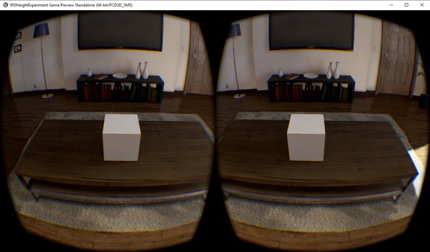

This paper addresses the question: to what extent can deliberate manipulations

of interpupillary distance (IPD) and eye height be used in a virtual reality

(VR) experience to influence a user's sense of their own scale with respect to

their surrounding environment - evoking, for example, the illusion of being

miniaturized, or of being a giant? In particular, we report the results of an

experiment in which we separately study the effect of each of these body scale

manipulations on users' perception of object size in a highly detailed,

photorealistically rendered immersive virtual environment, using both absolute

numeric measures and body-relative actions. Following a real world training

session, in which participants learn to accurately report the metric sizes of

individual white cubes (3"-20") presented one at a time on a table in front

of them, we conduct two blocks of VR trials using nine different combinations of

IPD and eye height. In the first block of trials, participants report the

perceived metric size of a virtual white cube that sits on a virtual table, at

the same distance used in the real-world training, within a realistic virtual

living room filled with many objects capable of providing familiar size cues.

In the second block of trials, participants use their hands to indicate the

perceived size of the cube. We found that size judgments were moderately

correlated (r = 0.4) between the two response methods, and that neither

altered eye height (± 50cm) nor reduced (10mm) IPD had a significant

effect on size judgments, but that a wider (150mm) IPD caused a significant

(μ = 38%, p < 0.01) decrease in perceived cube size. These

findings add new insights to our understanding of how eye height and IPD

manipulations can affect people's perception of scale in highly realistic

immersive VR scenarios.

|

Assessing the Relevance of Eye Gaze Patterns During Collision Avoidance in Virtual Reality, Kamala Varma, Stephen J. Guy and Victoria Interrante (2017) ICAT-EGVE International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments, pp. 149-152. [Best Short Paper]

[PDF]

[abstract]

|

To increase presence in virtual reality environments requires a meticulous

imitation of human behavior in virtual agents. In the specific case of collision

avoidance, agents' interaction will feel more natural if they are able to both

display and respond to non-verbal cues. This study informs their behavior by

analyzing participants' reaction to nonverbal cues. Its aim is to confirm

previous work that shows head orientation to be a primary factor in collision

avoidance negotiation, and to extend this to investigate the additional

contribution of eye gaze direction as a cue. Fifteen participants were directed

to walk towards an oncoming agent in a virtual hallway, who would exhibit

various combinations of head orientation and eye gaze direction based cues.

Closely prior to the potential collision the display turned black and the

participant had to move in avoidance of the agent as if she were still present.

Meanwhile, their own eye gaze was tracked to identify where their focus was

directed and how it related to their response. Results show that the natural

tendency was to avoid the agent by moving right. However, participants showed a

greater compulsion to move leftward if the agent cued her own movement to the

participant's right, whether through head orientation cues (consistent with

previous work) or through eye gaze direction cues (extending previous work). The

implications of these findings are discussed.

|

Impact of Visual and Experiential Realism on Distance Perception in VR using a Custom Video See-Through System, Koorosh Vaziri, Peng Liu, Sahar Aseeri and Victoria Interrante (2017) ACM Symposium on Applied Perception.

[PDF]

[abstract]

|

Immersive virtual reality (VR) technology has the potential to play

an important role in the conceptual design process in architecture,

if we can ensure that sketch-like structures are able to afford an accurate

egocentric appreciation of the scale of the interior space of a

preliminary building model. Historically, it has been found that people

tend to perceive egocentric distances in head-mounted display

(HMD) based virtual environments as being shorter than equivalent

distances in the real world. Previous research has shown that in

such cases, reducing the quality of the computer graphics does not

make the situation significantly worse. However, other research

has found that breaking the illusion of reality in a compellingly

photorealistic VR experience can have a significant negative impact

on distance perception accuracy.

In this paper, we investigate the impact of graphical realism on

distance perception accuracy in VR from a novel perspective. Rather

than starting with a virtual 3D model and varying its surface texture,

we start with a live view of the real world, presented through a

custom-designed video/optical-see-through HMD, and apply image

processing to the video stream to remove details. This approach

offers the potential to explore the relationship between visual and

experiential realism in a more nuanced manner than has previously

been done. In a within-subjects experiment across three different

real-world hallway environments, we asked people to perform blind

walking to make distance estimates under three different viewing

conditions: real-world view through the HMD; closely registered

camera views presented via the HMD; and Sobel-filtered versions

of the camera views, resulting in a sketch-like (NPR) appearance. We

found: 1) significant amounts of distance underestimation in all

three conditions, most likely due to the heavy backpack computer

that participants wore to power the HMD and cameras/graphics;

2) a small but statistically significant difference in the amount of

underestimation between the real world and camera/NPR viewing

conditions, but no significant difference between the camera

and NPR conditions. There was no significant difference between

participants' ratings of visual and experiential realism in the real

world and camera conditions, but in the NPR condition participants'

ratings of experiential realism were significantly higher than their

ratings of visual realism. These results confirm the notion that experiential

realism is only partially dependent on visual realism, and

that degradation of visual realism, independently of experiential

realism, does not significantly impact distance perception accuracy

in VR.
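For readers unfamiliar with the Sobel filtering used to produce the sketch-like view, here is a minimal pure-Python sketch of a Sobel gradient-magnitude filter on a grayscale image. It is illustrative only: the paper does not describe its implementation, a live video pipeline would use an optimized or GPU-based routine, and the function name and toy frame are assumptions.

```python
import math

# Standard 3x3 Sobel kernels for horizontal and vertical intensity gradients.
SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def sobel_magnitude(gray):
    """Return the gradient magnitude for a 2D list of grayscale values.

    Border pixels are left at 0 because the 3x3 kernel does not fit there.
    """
    rows, cols = len(gray), len(gray[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = gy = 0.0
            for dr in range(3):
                for dc in range(3):
                    pixel = gray[r + dr - 1][c + dc - 1]
                    gx += SOBEL_X[dr][dc] * pixel
                    gy += SOBEL_Y[dr][dc] * pixel
            out[r][c] = math.hypot(gx, gy)
    return out


# Toy frame: dark left half, bright right half, so the only edge is vertical.
frame = [[0, 0, 255, 255] for _ in range(4)]
edges = sobel_magnitude(frame)
```

Uniform regions produce zero response and intensity boundaries produce strong response, which is why the filtered view retains outlines while discarding surface detail.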

|

Predicting Destination using Head Orientation and Gaze Direction During Locomotion in VR, Jonathan Gandrud and Victoria Interrante (2016) ACM Symposium on Applied Perception, pp. 31-38.

[PDF]

[abstract]

|

This paper reports preliminary investigations into the extent to which future directional intention might be reliably inferred from head

pose and eye gaze during locomotion. Such findings could help inform the more effective implementation of realistic detailed animation

for dynamic virtual agents in interactive first-person crowd simulations in VR, as well as the design of more efficient predictive

controllers for redirected walking. In three different studies, with a total of 19 participants, we placed people at the base of a

T-shaped virtual hallway environment and collected head position, head orientation, and gaze direction data as they set out to perform a

hidden target search task across two rooms situated at right angles to the end of the hallway. Subjects wore an nVisorST50 HMD equipped

with an Arrington Research ViewPoint eye tracker; positional data were tracked using a 12-camera Vicon MX40 motion capture system. The

hidden target search task was used to blind participants to the actual focus of our study, which was to gain insight into how effectively

head position, head orientation and gaze direction data might predict people's eventual choice of which room to search first. Our results

suggest that eye gaze data does have the potential to provide additional predictive value over the use of 6DOF head tracked data alone,

despite the relatively limited field-of-view of the display we used.

|

Towards Achieving Robust Video Self-avatars under Flexible Environment Conditions, Loren Puchalla Fiore and Victoria Interrante (2012) International Journal of Virtual Reality (special issue featuring papers from the IEEE VR Workshop on Off-The-Shelf Virtual Reality), 11(3), pp. 33-41.

[PDF]

[abstract]

|

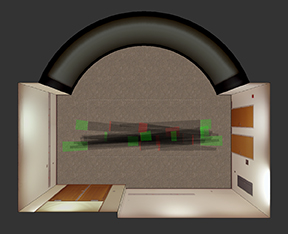

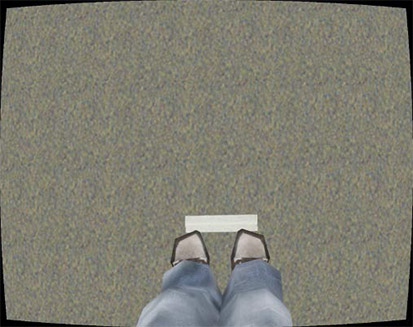

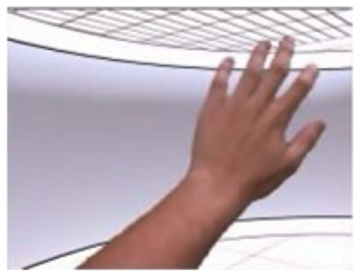

The user's sense of presence within a virtual environment is very important as it affects their propensity to experience the virtual world as if it were real. A common method of immersion is to use a head-mounted display (HMD) which gives the user a stereoscopic view of the virtual world encompassing their entire field of vision. However, the disadvantage to using an HMD is that the user's view of the real world is completely blocked including the view of his or her own body, thereby removing any sense of embodiment in the virtual world. Without a body, the user is left feeling that they are merely observing a virtual world, rather than experiencing it. We propose

using a video-based see-thru HMD (VSTHMD) to capture video of the view of the real-world and then segment the user's body from that video and composite it into the virtual environment. We have developed a VSTHMD using commercial-off-the-shelf components, and have developed a preliminary algorithm to segment and composite the user's arms and hands. This algorithm works by building probabilistic models of the appearance of the room within which the VSTHMD is used, and the user's body. These are then used to classify input video pixels in real-time into foreground and background layers. The approach has promise, but additional measures need to be taken to more robustly handle situations in which the background contains skin-colored objects such as wooden doors. We propose several methods to eliminate such false positives, and discuss the initial results of using the 3D data from a Kinect to identify false positives.
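The general idea of classifying pixels with two appearance models can be sketched as follows. This is not the algorithm from the paper, which only states that probabilistic models of the room and of the user's body are built; the independent per-channel Gaussian color models, the function names, and the sample colors below are all assumptions made for illustration.

```python
import math


def fit_gaussian(samples):
    """Fit an independent per-channel Gaussian (means, variances) to RGB samples."""
    n = len(samples)
    means = [sum(s[ch] for s in samples) / n for ch in range(3)]
    variances = [
        # Floor the variance at 1.0 to avoid division by zero on constant channels.
        max(sum((s[ch] - means[ch]) ** 2 for s in samples) / n, 1.0)
        for ch in range(3)
    ]
    return means, variances


def log_likelihood(pixel, model):
    """Log-likelihood of an RGB pixel under a per-channel Gaussian model."""
    means, variances = model
    return sum(
        -0.5 * math.log(2.0 * math.pi * var) - (value - mean) ** 2 / (2.0 * var)
        for value, mean, var in zip(pixel, means, variances)
    )


def is_foreground(pixel, body_model, room_model):
    """Label a pixel as body (foreground) when the body model explains it better."""
    return log_likelihood(pixel, body_model) > log_likelihood(pixel, room_model)


# Hypothetical training samples (RGB), not data from the paper.
body = fit_gaussian([(205, 150, 125), (215, 160, 135), (195, 145, 120)])
room = fit_gaussian([(60, 70, 80), (70, 80, 90), (50, 65, 75)])
```

A color-only model like this one would also label a skin-colored background object as foreground, which is the kind of false positive the abstract proposes to address with additional cues such as depth data.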

|

Correlations Between Physiological Response, Gait, Personality, and Presence in Immersive Virtual Environments, Lane Phillips, Victoria Interrante, Michael Kaeding, Brian Ries and Lee Anderson (2012) Presence: Teleoperators and Virtual Environments 21(3), Spring 2012, pp. 119-141.

[PDF]

[abstract]

|

In previous work, we have found significant differences in the accuracy with which people make initial spatial judgments in different types of head-mounted, display-based immersive virtual environments (IVEs; Phillips, Interrante, Kaeding, Ries, & Anderson, 2010). In particular, we have found that people tend to less severely underestimate egocentric distances in a virtual environment that is a photorealistic replica of a real place that they have recently visited than when the virtual environment is either a photorealistic replica of an unfamiliar place, or a nonphotorealistically (NPR) portrayed version of a familiar space. We have also noted significant differences in the effect of environment type on distance perception accuracy between individual participants. In this paper, we report the results of two experiments that seek further insight into these phenomena, focusing on factors related to depth of presence in the virtual environment.

In our reported first experiment, we immersed users (between-subjects) in one of the three different types of IVEs and asked them to perform a series of well-defined tasks along a delimited path, first in a control version of the environment, and then in a stressful variant in which the floor around the marked path was cut away to reveal a 20-ft drop. We assessed participants' sense of presence during each trial using a diverse set of measures, including: questionnaires, recordings of heart rate and galvanic skin response, and gait metrics derived from tracking data.

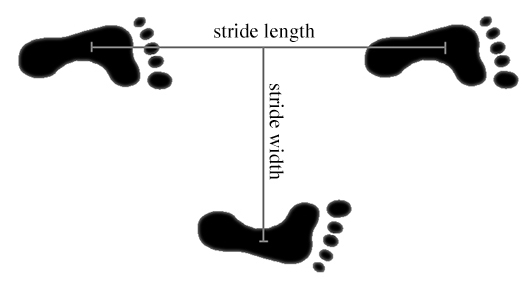

We computed the differences in each of these measures between the stressful and nonstressful versions of each environment, and then compared the changes due to stress between the different virtual environment conditions. Pooling the data over all participants in each group, we found significant physiological indications of stress after the appearance of the pit in all three environments, but we did not find significant differences in the magnitude of the stress response between the different virtual environment locales. We also did not find any significant difference in the level of subjective presence reported in each environment. However, we did find significant differences in gait: participants in the photorealistic replica room showed a significantly greater reduction in stride speed and stride length between the control and pit version of the room than did participants in either the photorealistically rendered nonreplica environment or the NPR replica environment conditions. Our second experiment, conducted with a new set of participants,

sought to more directly investigate potential correlations between distance estimation accuracy and personality, stress response, and reported sense of presence, comparatively across different immersive virtual environment conditions. We used pretest questionnaires to assess a variety of personality measures, and then randomly immersed participants (between-subjects) in either the photorealistic replica or photorealistic non-replica environment and assessed the accuracy of their egocentric distance judgments in that IVE, followed by control trials in a neutral, real-world location. We then had participants go through the same set of tasks as in our first experiment while we collected physiological measures of their stress level and tracked their gait, and we compared the changes in these measures between the neutral and pit-enhanced versions of the environment. Finally, we had people fill out a brief presence questionnaire. Analyzing all of these data, we found that participants made significantly greater distance estimation errors in the unfamiliar room environment than in the replica room environment, but no other differences between the two environments were significant. We found significant positive correlation between several of the personality measures, but we did not find any notable significant correlations between personality and presence, or between either personality or presence and gait changes or distance estimation accuracy. These results suggest to us that the relationship between personality, presence, and performance in IVEs is complicated and not easily captured by existing measures.

|

Avatar Self-Embodiment Enhances Distance Perception Accuracy in Non-Photorealistic Immersive Virtual Environments, Lane Phillips, Brian Ries, Michael Kaeding and Victoria Interrante (2010) IEEE Virtual Reality 2010, pp. 115-118.

[PDF]

[abstract]

|

Non-photorealistically rendered (NPR) immersive virtual environments (IVEs) can facilitate conceptual design in architecture by enabling preliminary design sketches to be previewed and experienced at full scale, from a first-person perspective. However, it is critical to ensure the accurate spatial perception of the represented information, and many studies have shown that people typically underestimate distances in most IVEs, regardless of rendering style. In previous work we have found that while people tend to judge distances more accurately in an IVE that is a high-fidelity replica of their concurrently occupied real environment than in an IVE that is a photorealistic representation of a real place that they've never been to, significant distance

estimation errors re-emerge when the replica environment is represented in an NPR style. We have also previously found that distance estimation accuracy can be improved, in photo-realistically rendered novel virtual environments, when people are given a fully tracked, high fidelity first person avatar self-embodiment. In this paper we report the results of an experiment that seeks to determine whether providing users with a high-fidelity avatar self-embodiment in an NPR virtual replica environment will enable them to perceive the 3D spatial layout of that environment more accurately. We find that users who are given a first person avatar in an NPR replica environment

judge distances more accurately than do users who experience the NPR replica room without an embodiment, but not as accurately as users whose distance judgments are made in a photorealistically rendered virtual replica room. Our results provide a partial solution to the problem of facilitating accurate distance perception in NPR virtual environments, while supporting and expanding the scope of previous findings that giving people a realistic avatar self-embodiment in an IVE can help them to interpret what they see through an HMD in a way that is more similar to how they would interpret a corresponding visual stimulus in the real world.

|

A Further Assessment of Factors Correlating with Presence in Immersive Virtual Environments, Lane Phillips, Victoria Interrante, Michael Kaeding, Brian Ries and Lee Anderson (2010) Joint Virtual Reality Conference of EGVE - EuroVR - VEC, pp. 55-63.

[PDF]

[abstract]

|

In previous work, we have found significant differences in participants' distance perception accuracy in different types of immersive virtual environments (IVEs). Could these differences be an indication of, or consequence of, differences in participants' sense of presence under these different virtual environment conditions? In this paper, we report the results of an experiment

that seeks further insight into this question.

In our experiment, users were fully tracked and immersed in one of three different IVEs: a photorealistically rendered replica of our lab, a non-photorealistically rendered replica of our lab, or a photorealistically rendered room that had similar dimensions as our lab, but was texture mapped with photographs from a different real place. Participants in each group were asked to perform a series of tasks, first in a normal (control) version of the IVE and then in a stress-enhanced version in which the floor surrounding the marked path was cut away to reveal a two-story drop. We assessed participants' depth of presence in each of these IVEs using a

questionnaire, recordings of heart rate and galvanic skin response, and gait metrics derived from tracking data, and then compared the differences between the stressful and non-stressful versions of each environment. Pooling the data over all participants in each group, we found significant

physiological indications of stress after the appearance of the pit in all three environments, but did not find significant differences in the magnitude of the physiological stress response between

the different environment conditions. However, we did find significant differences in the change in gait: participants in the photorealistic replica room group walked significantly slower, and with shorter strides, after exposure to the stressful version of the environment, than did participants in either the photorealistically rendered unfamiliar room or the NPR replica room conditions.

|

Gait Parameters in Stressful Virtual Environments, Lane Phillips, Brian Ries, Michael Kaeding and Victoria Interrante (2010) IEEE VR 2010 Workshop on Perceptual Illusions in Virtual Environments, pp. 19-22.

[PDF]

[abstract]

|

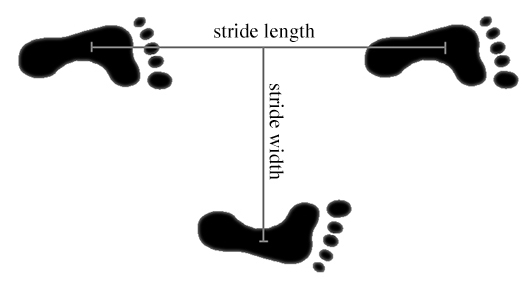

We share the results of a preliminary experiment where participants performed a simple task in a control immersive virtual environment (IVE) followed by a stressful IVE. Participants' gaits were recorded with a motion capture system. We computed speed, stride length, and stride width for each participant and found that participants take significantly shorter strides in the stressful environment, but stride width and walking speed do not show a significant difference. In a future experiment we will continue to study how gait parameters relate to a user's experience of a virtual environment. We hope to find parameters that can be used as metrics for comparing a user's level of presence in different virtual environments.
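The three gait parameters named above can be derived from tracked footfall positions along the following lines. This is a minimal sketch under stated assumptions: the input format, the axis convention (x as walking direction, y as lateral direction), and the particular definitions of stride length and stride width are illustrative choices, not the paper's processing pipeline.

```python
import math
from statistics import mean


def gait_parameters(footfalls):
    """Compute mean walking speed, stride length, and stride width.

    footfalls: time-ordered list of (time_s, x_m, y_m, foot), foot in {"L", "R"}.
    Stride length: distance between successive footfalls of the same foot.
    Stride width: lateral (y) separation between consecutive opposite-foot footfalls.
    Speed: forward (x) displacement from first to last footfall over elapsed time.
    """
    strides, widths = [], []
    last_by_foot = {}
    previous = None
    for time_s, x, y, foot in footfalls:
        if foot in last_by_foot:
            px, py = last_by_foot[foot]
            strides.append(math.hypot(x - px, y - py))
        last_by_foot[foot] = (x, y)
        if previous is not None and previous[3] != foot:
            widths.append(abs(y - previous[2]))
        previous = (time_s, x, y, foot)
    first, last = footfalls[0], footfalls[-1]
    speed = (last[1] - first[1]) / (last[0] - first[0])
    return {
        "speed_m_per_s": speed,
        "stride_length_m": mean(strides),
        "stride_width_m": mean(widths),
    }


# Hypothetical footfall data, not measurements from the experiment.
sample = [
    (0.0, 0.0, 0.1, "L"),
    (0.5, 0.6, -0.1, "R"),
    (1.0, 1.2, 0.1, "L"),
    (1.5, 1.8, -0.1, "R"),
]
params = gait_parameters(sample)
```

Comparing these values between the control and stressful conditions for each participant would give the kind of per-parameter difference the abstract reports.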

|

Distance Perception in NPR Immersive Virtual Environments, Revisited, Lane Phillips, Brian Ries, Michael Kaeding and Victoria Interrante (2009) ACM/SIGGRAPH Symposium on Applied Perception in Graphics and Visualization, pp. 11-14.

[PDF]

[abstract]

|

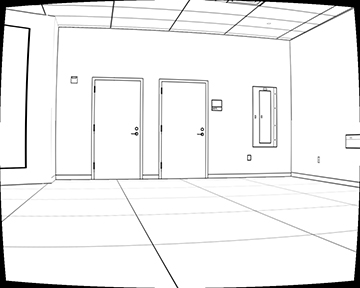

Non-photorealistic rendering (NPR) is a representational technique that allows communicating the essence of a design while giving the viewer the sense that the design is open to change. Our research aims to address the question of how to effectively use non-photorealistic rendering in immersive virtual environments to enable the intuitive exploration of early architectural design concepts at full scale. Previous studies have shown that people typically underestimate egocentric distances in immersive virtual environments, regardless of rendering style, although we have recently found that distance estimation errors are minimized in the special case that the virtual environment is a high-fidelity replica of a real environment that the viewer is presently in or has recently been in. In this paper we re-examine the impact of rendering style on distance perception accuracy in this virtual environments context. Specifically, we report the results of an experiment that seeks to assess the accuracy with which people judge distances in a non-photorealistically rendered virtual environment that is a directly-derived stylistic abstraction of the actual environment that they are currently in. Our results indicate that people tend to underestimate distances to a significantly greater extent in a co-located virtual environment when it is rendered using a line-drawing style than when it is rendered using high fidelity textures derived from photographs.

|

Investigating the Physiological Effects of Self-Embodiment in Stressful Virtual Environments, Brian Ries, Victoria Interrante, Cassandra Ichniowski and Michael Kaeding (2009) IEEE VR 2010 Workshop on Perceptual Illusions in Virtual Environments, pp. 176-198.

[PDF]

[abstract]

|

In this paper we explore the benefits that self-embodied virtual avatars provide to a user’s sense of presence while wearing a head-mounted display in an immersive virtual environment (IVE). Recent work has shown that providing a user with a virtual avatar can increase their performance when completing tasks such as ego-centric distance judgment. The results of this research imply that a heightened sense of presence is responsible for the improvement. However, there is an ambiguity in interpreting the results. Are users merely gaining additional scaling information of their environment by using the representation of their body as a metric, or is the virtual avatar heightening their sense of presence and increasing their accuracy? To investigate this question, we conducted a between-subjects design experiment to analyze any physiological differences between users given virtual avatars versus those who were not. If the virtual avatars are increasing a user's sense of presence, their physiological data should indicate a higher level of stress when presented with a stressful environment.

|

Analyzing the Effect of a Virtual Avatar's Geometric and Animation Fidelity on Ego-centric Spatial Perception in Immersive Virtual Environments, Brian Ries, Michael Kaeding, Lane Phillips and Victoria Interrante (2009) ACM Symposium on Virtual Reality Software and Technology, pp. 59-66.

[PDF]

[abstract]

|

Previous work has shown that giving a user a first-person virtual avatar can increase the accuracy of their egocentric distance judgments in an immersive virtual environment (IVE). This result provides one of the rare examples of a manipulation that can enable improved spatial task performance in a virtual environment without potentially compromising the ability for accurate information transfer to the real world. However, many open questions about the scope and limitations of the effectiveness of IVE avatar self-embodiment remain. In this paper, we report the results of a series of four experiments, involving a total of 40 participants, that explore the importance, to the desired outcome of enabling enhanced spatial perception accuracy, of providing a high level of geometric and motion fidelity in the avatar representation. In these studies, we assess participants' abilities to estimate egocentric distances in a novel virtual environment under four different conditions of avatar self-embodiment: a) no avatar; b) a fully tracked, custom-fitted, high fidelity avatar, represented using a textured triangle mesh; c) the same avatar as in b) but implemented with single point rather than full body tracking; and d) a fully tracked but simplified avatar, represented by a collection of small spheres at the raw tracking marker locations. The goal of these investigations is to attain insight into what specific characteristics of a virtual avatar representation are most important to facilitating accurate spatial perception, and what cost-saving measures in the avatar implementation might be possible. Our results indicate that each of the simplified avatar implementations we tested is significantly less effective than the full avatar in facilitating accurate distance estimation; in fact, the participants who were given the simplified avatar representations performed only marginally (but not significantly) more accurately than the participants who were given no avatar at all. These findings suggest that the beneficial impact of providing users with a high fidelity avatar self-representation may stem less directly from the low-level size and motion cues that the avatar embodiment makes available to them than from the cognitive sense of presence that the self-embodiment supports.

|

Transitional Environments Enhance Distance Perception in Immersive Virtual Reality Systems, Frank Steinicke, Gerd Bruder, Klaus Hinrichs, Markus Lappe, Brian Ries and Victoria Interrante (2009) ACM/SIGGRAPH Symposium on Applied Perception in Graphics and Visualization, pp. 19-26.

[PDF]

[abstract]

|

Several experiments have provided evidence that ego-centric distances are perceived as compressed in immersive virtual environments relative to the real world. The principal factors responsible for this phenomenon have remained largely unknown. However, recent experiments suggest that when the virtual environment (VE) is an exact replica of a user's real physical surroundings, the person's distance perception improves. Furthermore, it has been shown that when users start their virtual reality (VR) experience in such a virtual replica and then gradually transition to a different VE, their sense of presence in the actual virtual world increases significantly. In this case the virtual replica serves as a transitional environment between the real and virtual world.

In this paper we examine whether a person's distance estimation skills can be transferred from a transitional environment to a different VE. We have conducted blind walking experiments to analyze if starting the VR experience in a transitional environment can improve a person's ability to estimate distances in an immersive VR system. We found that users significantly improve their distance estimation skills when they enter the virtual world via a transitional environment.

|

Elucidating Factors that can Facilitate Veridical Spatial Perception in Immersive Virtual Environments, Victoria Interrante, Brian Ries, Jason Lindquist, Michael Kaeding and Lee Anderson (2008) Presence: Teleoperators and Virtual Environments 17(2), April 2008, pp. 176-198.

[PDF]

[abstract]

|

Ensuring veridical spatial perception in immersive virtual environments (IVEs) is an important yet elusive goal. In this paper, we present the results of two experiments that seek further insight into this problem. In the first of these experiments, initially reported in Interrante, Ries, Lindquist, and Anderson (2007), we seek to disambiguate two alternative hypotheses that could explain our recent finding (Interrante, Anderson, and Ries, 2006a) that participants appear not to significantly underestimate egocentric distances in HMD-based IVEs, relative to in the real world, in the special case that they unambiguously know, through first-hand observation, that the presented virtual environment is a high-fidelity 3D model of their concurrently occupied real environment. Specifically, we seek to determine whether people are able to make similarly veridical judgments of egocentric distances in these matched real and virtual environments because (1) they are able to use metric information gleaned from their exposure to the real environment to calibrate their judgments of sizes and distances in the matched virtual environment, or because (2) their prior exposure to the real environment enabled them to achieve a heightened sense of presence in the matched virtual environment, which leads them to act on the visual stimulus provided through the HMD as if they were interpreting it as a computer-mediated view of an actual real environment, rather than just as a computer-generated picture, with all of the uncertainties that that would imply. In our second experiment, we seek to investigate the extent to which augmenting a virtual environment model with faithfully-modeled replicas of familiar objects might enhance people's ability to make accurate judgments of egocentric distances in that environment.

|

The Effect of Self-Embodiment on Distance Perception in Immersive Virtual Environments, Brian Ries, Victoria Interrante, Michael Kaeding and Lee Anderson (2008) ACM Symposium on Virtual Reality Software and Technology, pp. 167-170.

[PDF]

[abstract]

|

Previous research has shown that egocentric distance estimation suffers from compression in virtual environments when viewed through head mounted displays. Though many possible variables and factors have been investigated, the source of the compression has yet to be fully identified. Recent experiments have hinted that an unsatisfied feeling of presence may be the cause. This paper investigates this presence hypothesis by exploring the benefit of providing self-embodiment to the user in the form of a virtual avatar, presenting an experiment that compares errors in egocentric distance perception, measured through direct blind walking, between subjects with a virtual avatar and those without. The results of this experiment show a significant improvement in egocentric distance estimates for users equipped with a virtual avatar over those without.
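To make the notion of distance compression in blind walking concrete, the sketch below computes a mean signed relative error per group of trials. This is an illustrative assumption rather than the analysis used in the paper: the function name `mean_relative_error`, the error definition (walked distance minus target distance, divided by target distance), and the sample numbers are all invented for the example. Under this definition a negative value indicates underestimation.

```python
def mean_relative_error(walked_m, target_m):
    """Mean signed relative error of blind-walking trials.

    walked_m: distances actually walked, in meters.
    target_m: corresponding true target distances, in meters.
    Negative result = underestimation (compression); 0 = veridical.
    """
    if len(walked_m) != len(target_m) or not walked_m:
        raise ValueError("need equal-length, non-empty trial lists")
    errors = [(w - t) / t for w, t in zip(walked_m, target_m)]
    return sum(errors) / len(errors)


# Invented sample trials for two hypothetical groups.
no_avatar = mean_relative_error([8.0, 4.5], [10.0, 5.0])
with_avatar = mean_relative_error([9.8, 5.0], [10.0, 5.0])
```

A between-subjects comparison would then contrast these per-participant errors across the avatar and no-avatar groups with an appropriate statistical test.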

|

Elucidating Factors that can Facilitate Veridical Spatial Perception in Immersive Virtual Environments, Victoria Interrante, Brian Ries, Jason Lindquist and Lee Anderson (2007) IEEE Virtual Reality, pp. 11-17.

[PDF]

[abstract]

|

Enabling veridical spatial perception in immersive virtual environments (IVEs) is an important yet elusive goal, as even the factors implicated in the often-reported phenomenon of apparent distance compression in HMD-based IVEs have yet to be satisfactorily elucidated. In recent experiments, we have found that participants appear less prone to significantly underestimate egocentric distances in HMD-based IVEs, relative to in the real world, in the special case that they unambiguously know, through first-hand observation, that the presented virtual environment is a high fidelity 3D model of their concurrently occupied real environment. We had hypothesized that this increased veridicality might be due to participants having a stronger sensation of ‘presence’ in the IVE under these conditions of co-location, which state of mind leads them to act on their visual input in the IVE similarly as they would in the real world (the presence hypothesis). However, alternative hypotheses are also possible. Primary among these is the visual calibration hypothesis: participants could be relying on metric information gleaned from their exposure to the real environment to calibrate their judgments of sizes and distances in the matched virtual environment. It is important to disambiguate between the presence and visual calibration hypotheses because they suggest different directions for efforts to facilitate veridical distance perception in general (non-co-located) IVEs. In this paper, we present the results of an experiment that seeks novel insight into this question. Using a mixed within- and between-subjects design, we compare participants’ relative ability to accurately estimate egocentric distances in three different virtual environment models: one that is an identical match to the occupied real environment; one in which each of the walls in our virtual room model has been surreptitiously moved ~10% inward towards the center of the room; and one in which each of the walls has been surreptitiously moved ~10% outwards from the center of the room. If the visual calibration hypothesis holds, then we should expect to see a degradation in the accuracy of people’s distance judgments in the surreptitiously modified models, manifested as an underestimation of distances when the IVE is actually larger than the real room and as an overestimation of distances when the IVE is smaller. However, what we found is that distances were significantly underestimated in the virtual environment relative to in the real world in each of the surreptitiously modified room environments, while remaining reasonably accurate (consistent with our previous findings) in the case of the faithfully size-matched room environment. In a post-test survey, participants in each of the three room size conditions reported equivalent subjective levels of presence and did not indicate any overt awareness of the room size manipulation.

|

Distance Perception in Immersive Virtual Environments, Revisited, Victoria Interrante, Lee Anderson and Brian Ries (2006) IEEE Virtual Reality, pp. 3-10.

[PDF]

[abstract]

|

Numerous previous studies have suggested that distances appear to be compressed in immersive virtual environments presented via head mounted display systems, relative to in the real world. However, the principal factors that are responsible for this phenomenon have remained largely unidentified. In this paper we shed some new light on this intriguing problem by reporting the results of two recent experiments in which we assess egocentric distance perception in a high fidelity, low latency, immersive virtual environment that represents an exact virtual replica of the participant's concurrently occupied real environment. Under these novel conditions, we make the startling discovery that distance perception appears not to be significantly compressed in the immersive virtual environment, relative to in the real world.

Selected Posters

|

A Little Unreality in a Realistic Replica Environment Degrades Distance Estimation Accuracy, Lane Phillips and Victoria Interrante (2011) IEEE Virtual Reality, pp. 235-236.

[PDF]

[poster]

[abstract]

|

Users of IVEs typically underestimate distances during blind walking tasks, even though they are accurate at this task in the real world. The cause of this underestimation is still not known. Our previous work found an exception to this effect: When the virtual environment was a realistic, co-located replica of the concurrently occupied real environment, users did not significantly underestimate distances. However, when the replica was rendered in an NPR style, we found that users underestimated distances. In this study we explore whether the inaccuracy in distance estimation could be due to lack of size and distance cues in our NPR IVE, or if it could be due to a lack of presence. We ran blind walking trials in a new replica IVE that combined features of the previous two IVEs. Participants significantly underestimated distances in this environment.

|

Lack of 'Presence' May be a Factor in the Underestimation of Egocentric Distances in Immersive Virtual Environments, Victoria Interrante, Lee Anderson and Brian Ries (2005) Journal of Vision [Abstract], 5(8): 527a.

[poster]

[abstract]

|

We report the results of a study intended to investigate the possibility that cognitive dissonance in ‘presence’ may play a role in the widely reported phenomenon of underestimation of egocentric distances in immersive virtual environments. In this study, we compare the accuracy of egocentric distance estimates, obtained via direct blind walking, across two cognitively different immersion conditions: one in which the presented virtual environment consists of a perfectly registered, high fidelity 3D model of the same space in which the user is physically located, and one in which the presented virtual environment is a high-fidelity 3D model of a different real space. In each space, we compare distance estimates obtained in the immersive virtual environment with distance estimates obtained in the corresponding physical environment. We also compare distance perception accuracy across two different exposure conditions: one in which the participant experiences the virtual space before s/he is exposed to the real space, and one in which the participant experiences the real space first. We find no significant difference in the accuracy of distance estimates obtained in the real vs. virtual environments when the virtual environment represents the same space as the occupied real environment, regardless of the order of exposure, but, consistent with previously reported findings by others, we find that distances are significantly underestimated in the virtual world, relative to the real world, when the virtual world represents a different place than the occupied real world. In the case of the non-co-located environment only, we also find a significant effect of previous experience in the represented space, i.e. participants who complete the experiment in the real world first exhibit less distance underestimation in the corresponding virtual environment than do participants who complete the experiment in the virtual world first.