Abstract

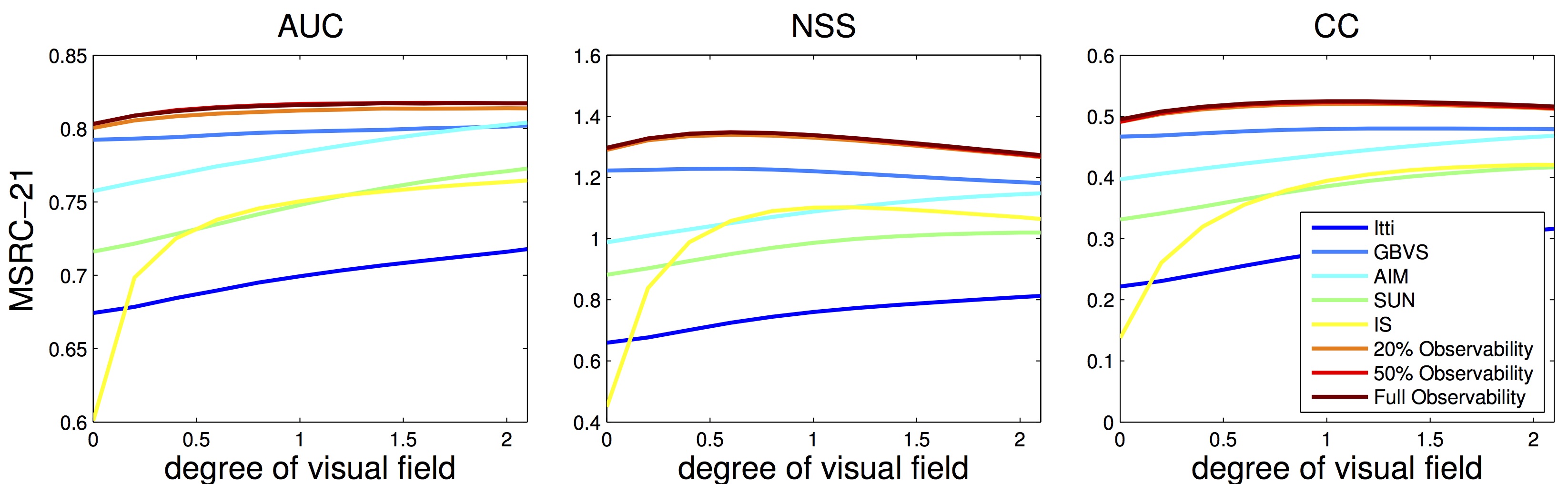

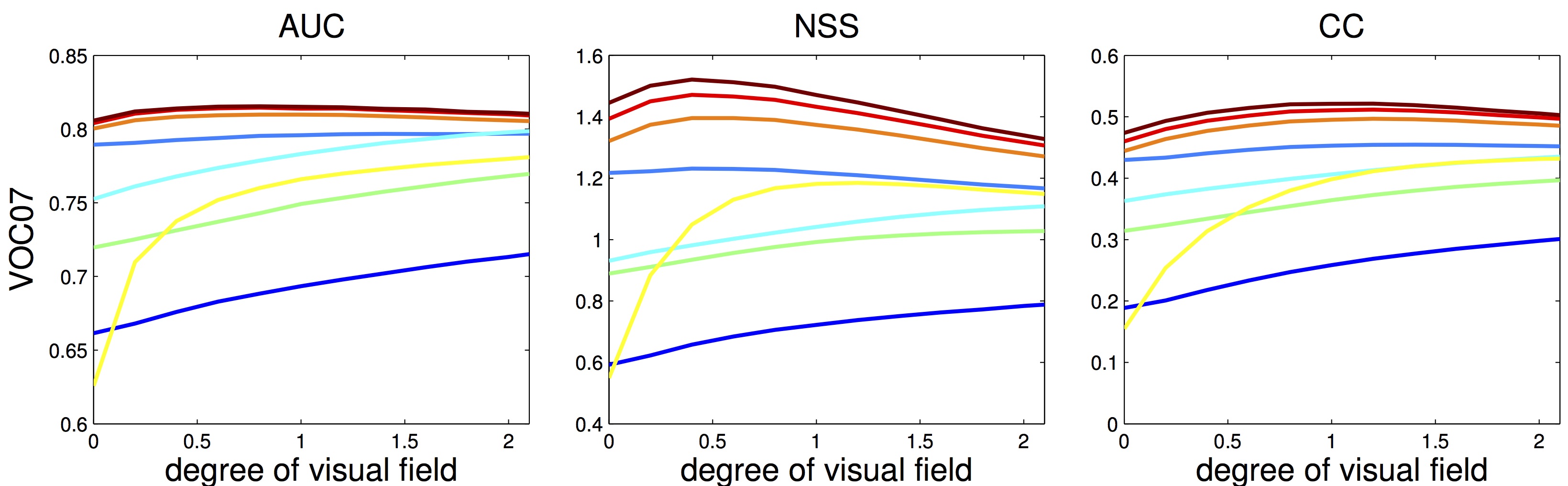

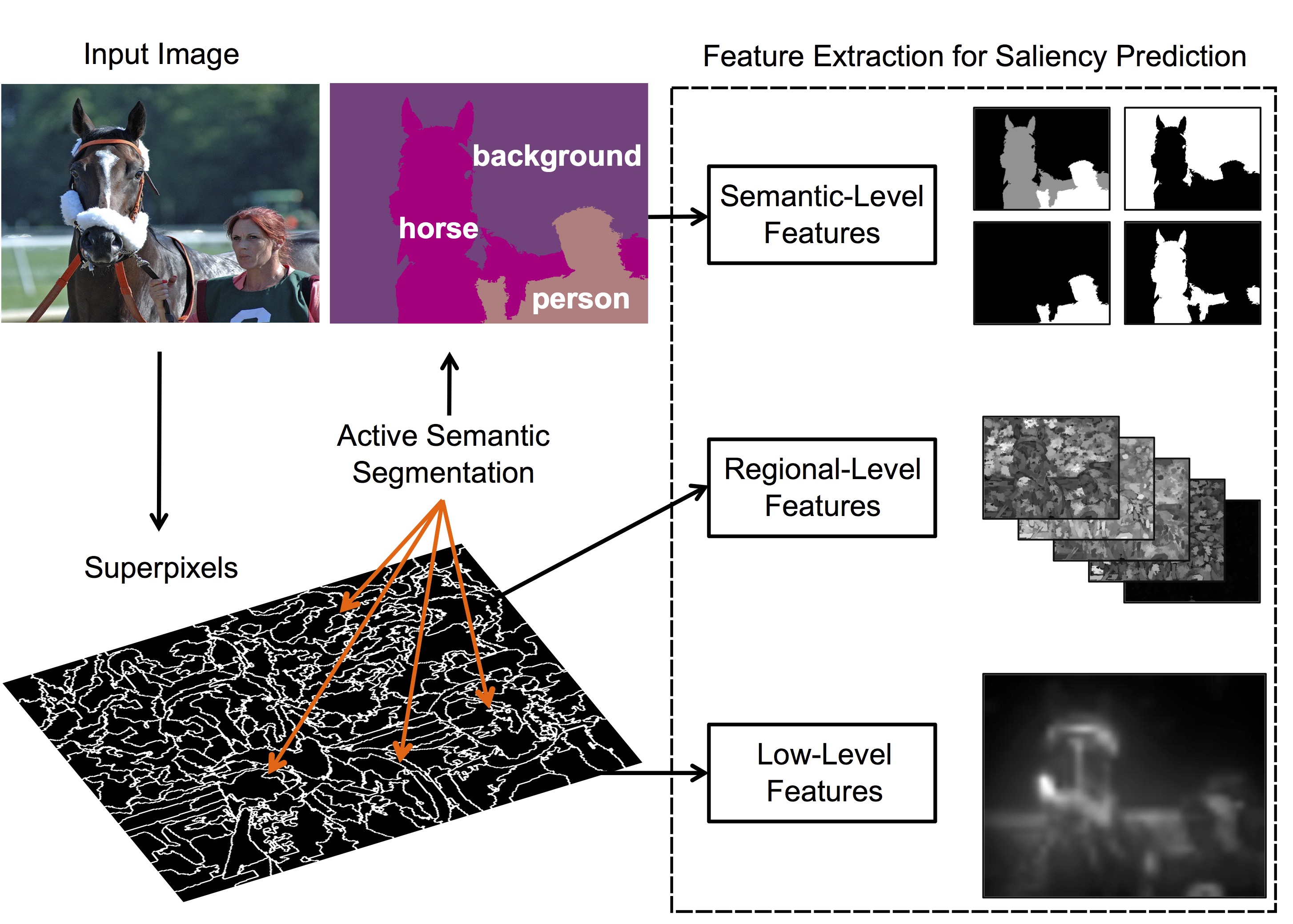

Semantic-level features have been shown to provide a strong cue for predicting eye fixations. They are usually implemented by evaluating object classifiers everywhere in the image. As a result, extracting semantic-level features may become a computational bottleneck that limits the applicability of saliency prediction in real-time applications. In this work, to reduce the computational cost at the semantic level, we introduce a saliency prediction model based on active semantic segmentation, in which a set of new features is extracted during the progressive extraction of the semantic labeling. We recorded eye fixations on all the images of the popular MSRC-21 and VOC07 datasets. Experiments on this new dataset demonstrate that the semantic-level features extracted from active semantic segmentation improve saliency prediction over low- and regional-level features alone, and that this approach makes it possible to control the computational overhead of adding semantics to the saliency predictor.
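The core idea is to spend a bounded amount of classifier evaluation on semantics and feed whatever labels are available into the saliency predictor. The minimal Python sketch below illustrates that idea. It is not the authors' method. Several elements are hypothetical: a fixed per-region priority score stands in for the active selection performed by active semantic segmentation, and saliency is a plain linear combination of features. The names `budgeted_semantic_features`, `predict_saliency`, `priority`, and `classify` are also hypothetical.

```python
from typing import Callable, Dict, List, Sequence


def budgeted_semantic_features(
    regions: Sequence[List[float]],
    priority: Sequence[float],
    classify: Callable[[List[float]], Dict[str, float]],
    classes: Sequence[str],
    budget: int,
) -> List[List[float]]:
    """Hypothetical sketch: run the expensive classifier on at most `budget`
    regions, highest priority first. Regions never evaluated keep an
    all-zero semantic vector, so the cost is capped by `budget`."""
    semantic = [[0.0] * len(classes) for _ in regions]
    # Assumption: a fixed priority order replaces the active selection step.
    order = sorted(range(len(regions)), key=lambda i: priority[i], reverse=True)
    for i in order[:budget]:
        scores = classify(regions[i])  # the costly semantic-level evaluation
        semantic[i] = [scores.get(c, 0.0) for c in classes]
    return semantic


def predict_saliency(
    low_level: Sequence[List[float]],
    semantic: Sequence[List[float]],
    weights: Sequence[float],
) -> List[float]:
    """Hypothetical sketch: per-region saliency as a weighted sum of the
    concatenated low-level and semantic-level features."""
    saliency = []
    for low, sem in zip(low_level, semantic):
        feats = list(low) + list(sem)
        saliency.append(sum(w * f for w, f in zip(weights, feats)))
    return saliency


# Toy usage: three regions, a classifier that records each call, budget of 2.
calls = []


def toy_classify(x: List[float]) -> Dict[str, float]:
    calls.append(x)
    return {"person": x[0], "car": 1.0 - x[0]}


regions = [[0.1], [0.9], [0.5]]
sem = budgeted_semantic_features(regions, [0.2, 0.8, 0.5], toy_classify,
                                 ["person", "car"], budget=2)
sal = predict_saliency([[1.0], [0.0], [0.5]], sem, weights=[0.5, 1.0, 0.0])
```

Raising `budget` trades more classifier calls for more complete semantic features, which mirrors, in a simplified form, the abstract's claim that the semantic overhead can be controlled.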

Resources

Paper

Ming Jiang, Xavier Boix, Juan Xu, Gemma Roig, Luc Van Gool, and Qi Zhao, "Saliency Prediction with Active Semantic Segmentation," in British Machine Vision Conference (BMVC), 2015 [pdf]

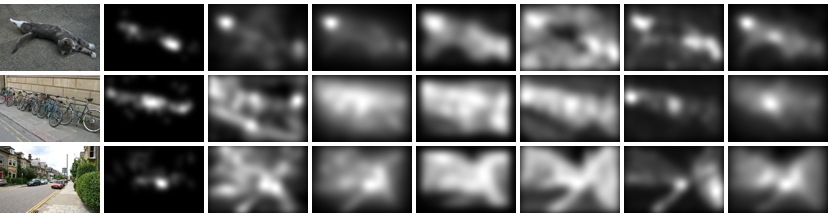

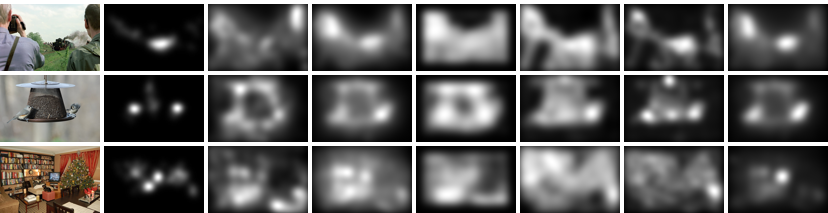

Images and Semantic Labels

Eye-tracking Data

Results