Abstract

Previous work has suggested that scene information plays a role in directing gaze. The structure of a scene provides global contextual information that complements local object information in saliency prediction. In this study, we explore how scene envelope properties such as openness, depth and perspective affect visual attention in natural outdoor images. To facilitate this study, we first build an eye tracking dataset of 500 natural scene images, with eye tracking data from 15 subjects free-viewing the images. We make observations on scene layout properties and propose a set of scene structural features relating to visual attention. Multiple kernel learning (MKL) is used as the computational module to integrate features at both low and high levels. Experimental results demonstrate that our model outperforms existing methods and that our scene structural features can improve the performance of other saliency models in outdoor scenes.

Resources

Manuscript: Haoran Liang, Ming Jiang, Ronghua Liang and Qi Zhao, "Saliency Prediction with Scene Structural Guidance". [pdf][bib]

Code: GitHub code for low-level and structural-level feature computation, model training with MKL, and saliency prediction.

Dataset: Image Stimuli (35M), Eye Tracking Data (1M).

Dataset

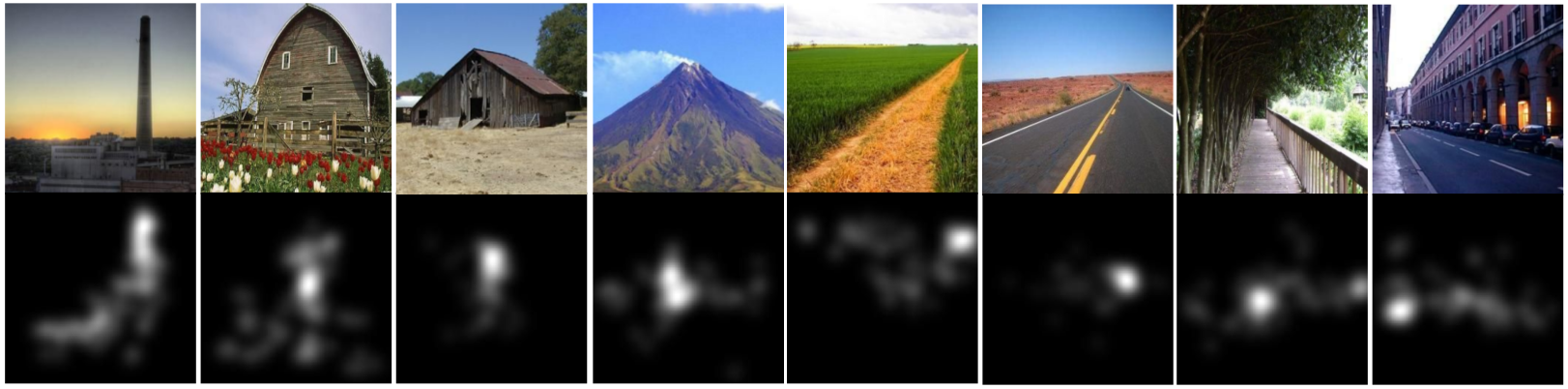

Examples

Analysis and Computational Model

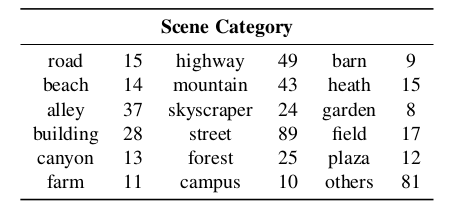

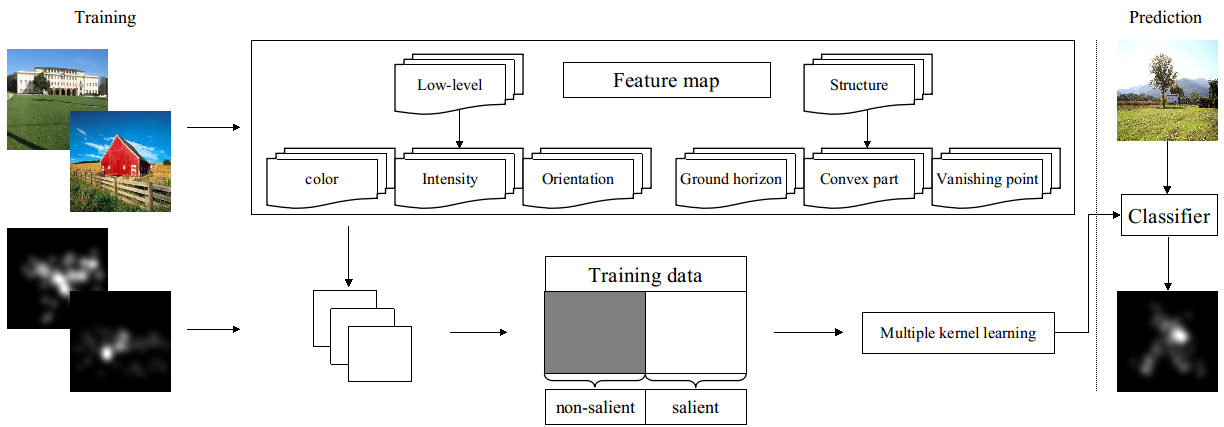

Three main factors, (a) the horizontal line, (b) the convex parts and (c) the vanishing point, are shown in the yellow boxes. These factors encode the scene structure and complement bottom-up features, which highlight regions with contrast.

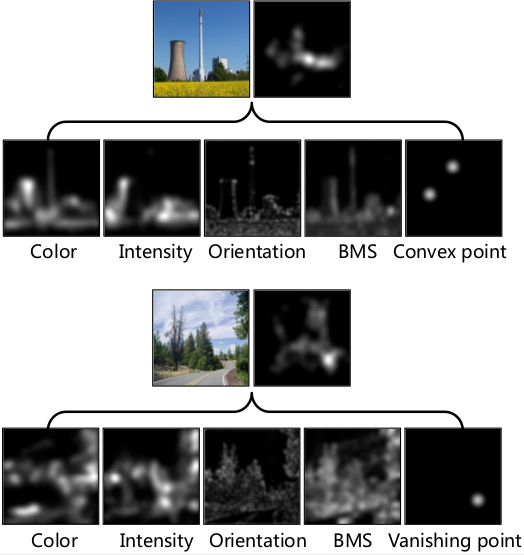

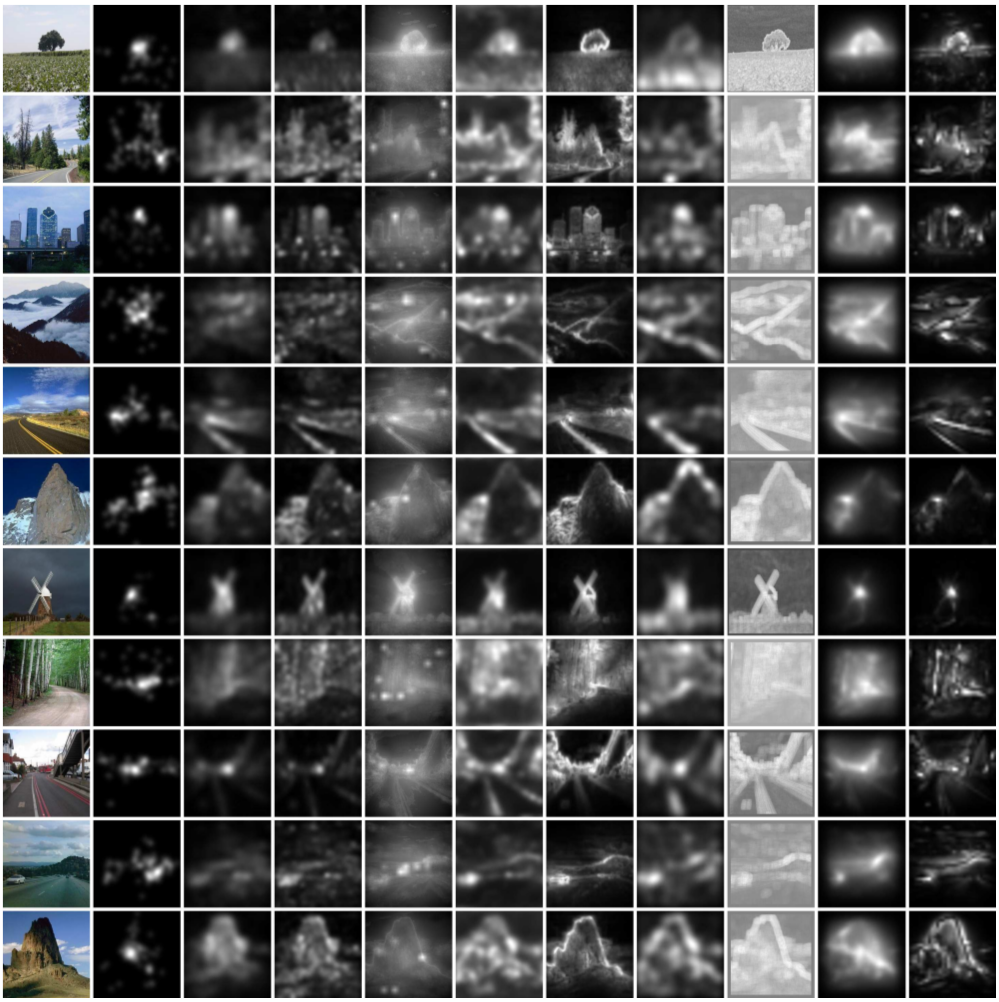

Illustration of feature maps

The flowchart of the proposed method for saliency prediction

Results

Qualitative Comparisons

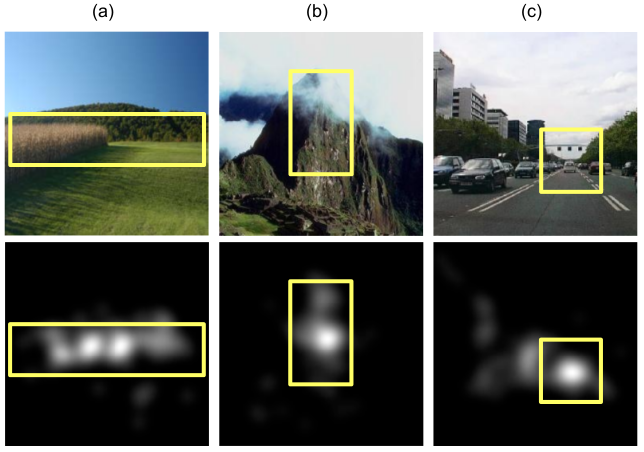

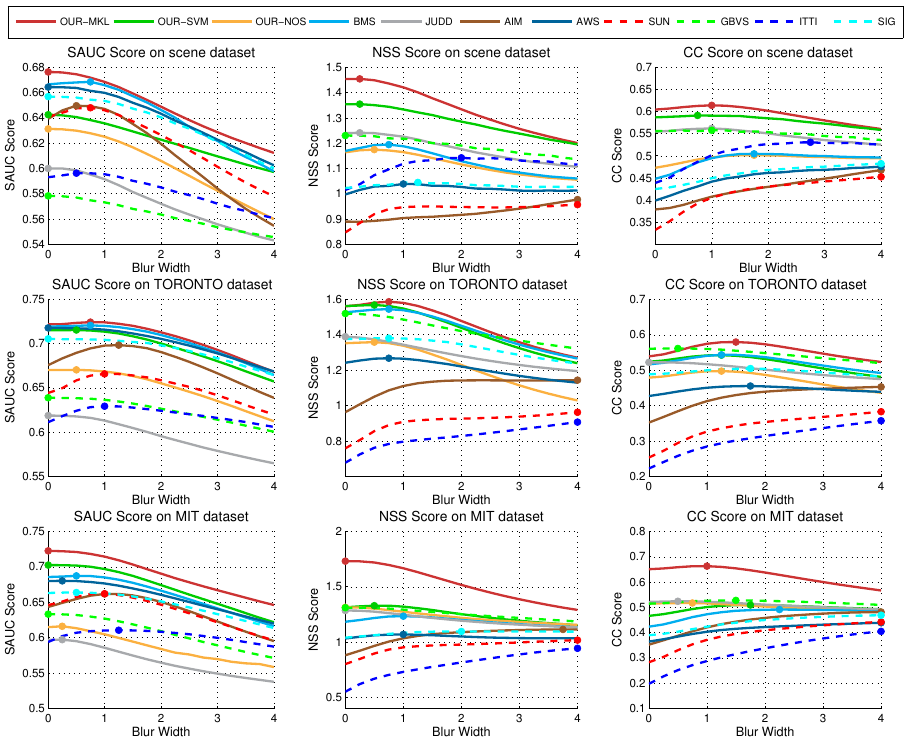

Results of the proposed model and the state-of-the-art models on our scene dataset.

Performance

Shuffled AUC, CC and NSS scores on the proposed scene dataset, the Toronto dataset and the MIT subset. The curves show the average performance over all stimuli. The blur width is parameterized by the Gaussian's standard deviation, measured in image widths.
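
For readers unfamiliar with these metrics, the following is a minimal sketch of how such scores are commonly computed. It is not the released evaluation code. The function names, the boolean fixation masks, the 3-sigma kernel radius and the exact rank-based AUC are all assumptions made for illustration; published implementations of Shuffled AUC often sample thresholds and negative points instead.

```python
import numpy as np

def gaussian_blur(sal, sigma_in_widths):
    """Blur a 2-D map with a separable Gaussian.

    sigma_in_widths is the standard deviation as a fraction of image width
    (assumed interpretation of "in image widths").
    """
    sal = sal.astype(float)
    sigma = sigma_in_widths * sal.shape[1]
    if sigma <= 0:
        return sal
    radius = max(1, int(round(3 * sigma)))  # assumed 3-sigma truncation
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (x / sigma) ** 2)
    kernel /= kernel.sum()
    # Replicate edges, then filter along rows and columns separately.
    padded = np.pad(sal, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def nss(sal, fixations):
    """Normalized Scanpath Saliency: mean z-scored saliency at fixated pixels.

    fixations is a boolean mask; the map must not be constant (std > 0).
    """
    z = (sal - sal.mean()) / sal.std()
    return z[fixations].mean()

def cc(sal, density):
    """Pearson correlation between a saliency map and a fixation density map."""
    return np.corrcoef(sal.ravel(), density.ravel())[0, 1]

def shuffled_auc(sal, fixations, other_fixations):
    """Shuffled AUC via the rank (Mann-Whitney) formulation.

    Positives are saliency values at this image's fixations; negatives are
    values at fixation locations taken from other images, which discounts
    center bias. Ties count as half.
    """
    pos = sal[fixations]
    neg = sal[other_fixations]
    greater = (pos[:, None] > neg[None, :]).mean()
    ties = (pos[:, None] == neg[None, :]).mean()
    return greater + 0.5 * ties
```

A performance curve like those in the figure could then be traced by calling `gaussian_blur` over a range of `sigma_in_widths` values, scoring each blurred map, and averaging the scores over all stimuli.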