Satellite Images and Climate Change

Jonathan Rogness

University of Minnesota

What is a Satellite Image?

Satellites carry sensors that respond to the intensity of incoming light.

- Increased Light ⇒ Increased Voltage

- Analog voltage converted to a digital number (DN), usually 0-255 or 0-1023.
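As a minimal sketch of the voltage-to-DN step, assume a hypothetical sensor whose voltage rises linearly from `v_min` to `v_max`. Those names, the 0-5 V range, and the linear response are illustrative assumptions, not properties of any particular satellite.

```python
def voltage_to_dn(voltage, v_min=0.0, v_max=5.0, bits=8):
    """Quantize an analog voltage into a digital number (DN).

    v_min, v_max, and the linear response are illustrative assumptions.
    bits=8 gives DNs in 0-255; bits=10 gives DNs in 0-1023.
    """
    levels = 2 ** bits
    # Clamp to the sensor's range, then scale to a fraction in [0, 1].
    clamped = min(max(voltage, v_min), v_max)
    fraction = (clamped - v_min) / (v_max - v_min)
    # Map the fraction onto the integers 0 .. levels - 1.
    return min(int(fraction * levels), levels - 1)
```

With these assumed defaults, more light (higher voltage) always gives an equal or larger DN, and the top of the range maps to 255 for 8 bits or 1023 for 10 bits.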

Different sensors respond to different kinds of light: red, green, blue, infrared, and so on.

Different Satellites

LandSat: carries two different "cameras."

- MSS: 4 sensors

- TM: 7 sensors

AVHRR (Advanced Very High Resolution Radiometer)

- 4-6 sensors

Spatial Resolutions

The spatial resolution of a sensor is the size of the smallest detectable feature.

- LandSat MSS: ~80 m

- LandSat TM: ~30 m

- AVHRR: ~1 km

LandSat: images have more detail, but the data sets are huge. Coverage only repeats every 16-18 days, depending on the satellite.

AVHRR: images can have daily coverage and are more manageable for large-area projects, but can't detect small features.

Problems with Large Resolutions

If your sensor has a coarse spatial resolution (large pixels), each pixel may cover so many different things that its value is meaningless!
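To illustrate the mixed-pixel problem, here is a rough sketch that averages a fine-resolution scene into coarser pixels. The 4x4 scene and its DN values are made up for illustration, and simple block averaging is an assumption about how a coarse sensor blends what it sees.

```python
import numpy as np

def coarsen(image, factor):
    """Average non-overlapping factor x factor blocks into single pixels."""
    rows, cols = image.shape
    # Trim so both dimensions are exact multiples of the block size.
    rows -= rows % factor
    cols -= cols % factor
    trimmed = image[:rows, :cols]
    blocks = trimmed.reshape(rows // factor, factor, cols // factor, factor)
    return blocks.mean(axis=(1, 3))

# Hypothetical scene: dark water (DN 20) and bright sand (DN 220).
fine = np.array([
    [20, 20, 220, 220],
    [20, 20, 220, 220],
    [20, 220, 20, 220],
    [220, 20, 220, 20],
], dtype=float)

coarse = coarsen(fine, 2)
```

The top two coarse pixels come out as pure water (20) and pure sand (220), but the bottom two both average to 120, a value that matches neither surface.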

From DNs to Images

The light measurements from a single sensor can be displayed as a grayscale image, where 0 is black and 255 is white.

Otherwise we assign the measurements from three sensors to the red, green, and blue display channels to create "color composite" images.
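A minimal sketch of both display options, assuming 8-bit DNs stored in NumPy arrays. The function names and the tiny 2x2 bands are hypothetical.

```python
import numpy as np

def to_grayscale(dn, max_dn=255):
    """Scale DNs to display intensities in [0, 1]: 0 is black, max_dn is white."""
    return np.clip(np.asarray(dn, dtype=float) / max_dn, 0.0, 1.0)

def color_composite(red_band, green_band, blue_band, max_dn=255):
    """Stack three bands into a (rows, cols, 3) RGB array with values in [0, 1]."""
    bands = (red_band, green_band, blue_band)
    return np.stack([to_grayscale(b, max_dn) for b in bands], axis=-1)

# Hypothetical 2x2 DN arrays from three different sensors.
band_a = np.array([[0, 255], [128, 64]])
band_b = np.array([[10, 200], [128, 32]])
band_c = np.array([[20, 100], [128, 16]])

rgb = color_composite(band_a, band_b, band_c)
```

Passing the red, green, and blue sensors in that order would give a true color composite. Passing a non-visible band such as near-infrared in one of the three slots would give a false color composite. An array shaped like `rgb` is the kind of input that image display routines such as matplotlib's `imshow` accept.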

Monitoring Climate Change

Satellite images can be used to monitor environmental changes in a number of ways, including:

- Overhead pictures

- Tracking health of vegetation

- Tracking types of vegetation and land use

True Color Composites

False Color Composites

Vegetation

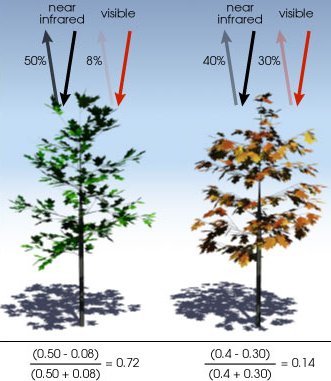

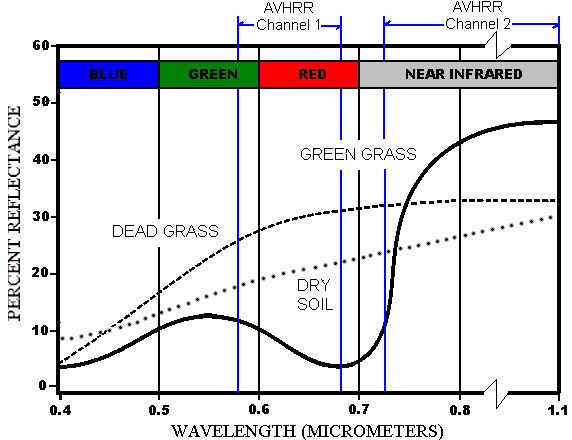

Healthy vegetation tends to absorb visible red light (AVHRR Ch1) but reflect near-infrared (NIR) light (AVHRR Ch2). This leads to NDVI, the Normalized Difference Vegetation Index.

Definition. NDVI of a pixel = (NIR - Red) / (NIR + Red).
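The definition translates directly into array code. This is a minimal sketch assuming the NIR and red measurements are NumPy-compatible arrays of the same shape. Returning NaN where both values are zero is a choice made here, not part of the definition.

```python
import numpy as np

def ndvi(nir, red):
    """Compute NDVI = (NIR - Red) / (NIR + Red) for each pixel."""
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    total = nir + red
    # Start with NaN everywhere so pixels with NIR + Red == 0 stay undefined.
    result = np.full(total.shape, np.nan)
    np.divide(nir - red, total, out=result, where=total != 0)
    return result

# Hypothetical reflectances: a vegetated pixel and a pixel with equal NIR and red.
values = ndvi([0.5, 0.1], [0.1, 0.1])
```

For non-negative inputs NDVI lies between -1 and 1. The first pixel here, with strong NIR and weak red, gives about 0.67, while the second gives 0.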

NDVI

Land Cover Classification

Land cover classification assigns each pixel to a category of land cover. It has been done globally at a 1 km scale with AVHRR data, and at a 30 m scale in the US with LandSat TM data. There are two ways to classify pixels: supervised and unsupervised.

Supervised Classification

Idea: use previous knowledge to help the computer recognize certain spectral signatures.
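One simple way to act on this idea is a minimum-distance classifier: average the labeled pixels of each class into a mean spectral signature, then give each new pixel the class whose signature is nearest. This is only one of several possible supervised methods. The class names, the two-band (red, NIR) layout, and the reflectance values below are hypothetical.

```python
import numpy as np

def train_signatures(samples):
    """samples: dict mapping class name -> (n_pixels, n_bands) array of labeled pixels."""
    names = sorted(samples)
    means = np.array([np.mean(samples[name], axis=0) for name in names])
    return names, means

def classify(pixels, names, means):
    """Assign each pixel (a row of band values) to the class with the nearest mean."""
    pixels = np.asarray(pixels, dtype=float)
    # distances[i, j] = Euclidean distance from pixel i to class mean j.
    distances = np.linalg.norm(pixels[:, None, :] - means[None, :, :], axis=2)
    return [names[j] for j in distances.argmin(axis=1)]

# Hypothetical training pixels: columns are (red, NIR) reflectance.
training = {
    "water": np.array([[0.05, 0.02], [0.06, 0.03]]),
    "vegetation": np.array([[0.05, 0.50], [0.08, 0.45]]),
    "bare soil": np.array([[0.25, 0.30], [0.30, 0.35]]),
}

names, means = train_signatures(training)
labels = classify([[0.06, 0.48], [0.04, 0.03], [0.28, 0.31]], names, means)
```

Here the "previous knowledge" is the `training` dictionary: pixels whose land cover is already known.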

Cloud Detection

Harder than you'd think!

There are too many cloud types to reliably recognize every "cloud spectral signature." Besides, we can't run land cover classification (LCC) on every pixel ever beamed down. We need fast run-time cloud detection techniques.

We tend to use quick "threshold tests." If a pixel fails, say, 3 out of 5 tests, we say it's a cloud.
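The voting rule can be sketched in a few lines. The function name and the default of 3 failures are illustrative, taken from the "3 out of 5" example rather than from any specific algorithm.

```python
def is_cloud(test_failures, min_failures=3):
    """Decide whether a pixel is cloud from its threshold-test results.

    test_failures: iterable of booleans, True where the pixel failed a test.
    min_failures: number of failed tests needed to call the pixel a cloud.
    """
    return sum(bool(failed) for failed in test_failures) >= min_failures
```

For example, `is_cloud([True, True, True, False, False])` returns `True`, while a pixel that fails only two of the five tests is not called a cloud.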

CLouds from AVhRr (CLAVR)

Includes tests like:

- RGC: is the Ch1 reflectance > 44%?

- RR: is 0.9 < (Ch2/Ch1) < 1.1?

- TGC: is the "temperature" < 249 K?
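The three tests listed above can be written as simple comparisons. This is a sketch, not the actual CLAVR code: the function name, the argument names, and the assumption that reflectances arrive in percent and temperature in kelvin are all illustrative.

```python
def clavr_style_tests(ch1_percent, ch2_percent, temperature_k):
    """Return which of the three threshold tests flag a pixel as cloudy.

    ch1_percent, ch2_percent: Ch1 and Ch2 reflectances in percent (assumed units).
    temperature_k: the pixel's "temperature" in kelvin (assumed units).
    """
    # Guard against division by zero; an infinite ratio fails the RR range check.
    ratio = ch2_percent / ch1_percent if ch1_percent != 0 else float("inf")
    return {
        "RGC": ch1_percent > 44.0,       # very bright in Ch1
        "RR": 0.9 < ratio < 1.1,         # Ch1 and Ch2 nearly equal
        "TGC": temperature_k < 249.0,    # very cold
    }

# Hypothetical pixels: one bright and cold, one dark in Ch1 and warm.
bright_cold = clavr_style_tests(60.0, 58.0, 230.0)
dark_warm = clavr_style_tests(8.0, 40.0, 290.0)
```

The bright, cold pixel is flagged by all three tests, and the dark, warm pixel by none.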

Cloud edges are hard to detect, so CLAVR is often applied to 2x2 blocks of pixels, and all 4 pixels in a block get the same label:

- 0 pixels cloud: all 4 "clear"

- 1-3 pixels cloud: all 4 "mixed"

- 4 pixels cloud: all 4 "cloud"
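The 2x2 rule can be sketched with NumPy, assuming a per-pixel cloud mask has already been computed. The function name is hypothetical, and the sketch requires even image dimensions for simplicity.

```python
import numpy as np

def label_blocks(cloud_mask):
    """Label every pixel "clear", "mixed", or "cloud" based on its 2x2 block.

    cloud_mask: 2D boolean array, True where a pixel was flagged as cloud.
    """
    mask = np.asarray(cloud_mask, dtype=bool)
    rows, cols = mask.shape
    if rows % 2 or cols % 2:
        raise ValueError("this sketch assumes even image dimensions")
    # Count the cloudy pixels in each non-overlapping 2x2 block.
    counts = mask.reshape(rows // 2, 2, cols // 2, 2).sum(axis=(1, 3))
    block_labels = np.where(
        counts == 0, "clear", np.where(counts == 4, "cloud", "mixed")
    )
    # Copy each block's label to all 4 of its pixels.
    return np.repeat(np.repeat(block_labels, 2, axis=0), 2, axis=1)

# Hypothetical 4x4 mask: one clear block, one full cloud block,
# one block with a single cloudy pixel, and another clear block.
mask = np.array([
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
], dtype=bool)

labels = label_blocks(mask)
```

In this example the single cloudy pixel in the lower-left block turns all 4 of that block's pixels into "mixed".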