Abstract

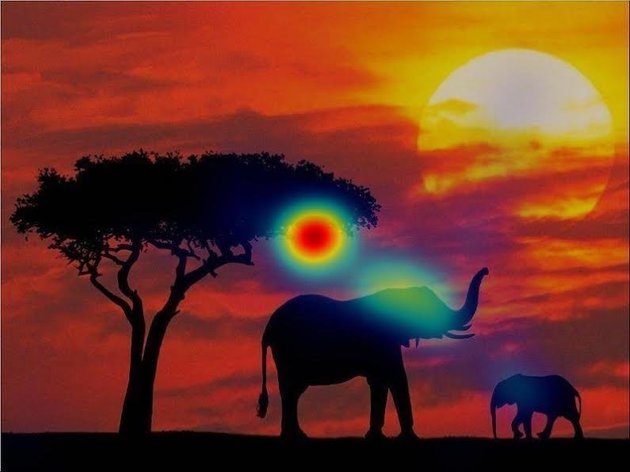

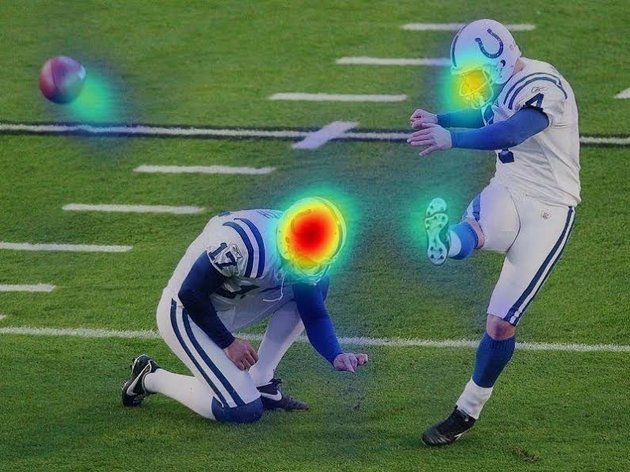

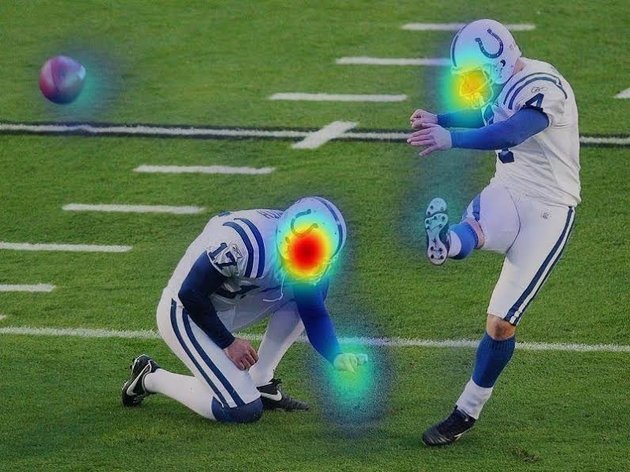

The social difficulties that are a hallmark of autism spectrum disorder (ASD) are thought to arise, at least in part, from atypical attention toward stimuli and their features. To investigate this hypothesis comprehensively, we characterized 700 complex natural scene images with a novel three-layered saliency model that incorporated pixel-level (e.g., contrast), object-level (e.g., shape), and semantic-level attributes (e.g., faces) on 5,551 annotated objects. Compared with matched controls, people with ASD had a stronger image center bias regardless of object distribution, reduced saliency for faces and for locations indicated by social gaze, and yet a general increase in pixel-level saliency at the expense of semantic-level saliency. These results were further corroborated by direct analysis of fixation characteristics and investigation of feature interactions. Our results for the first time quantify atypical visual attention in ASD across multiple levels and categories of objects.
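As a rough illustration of how saliency layers could trade off against one another, the sketch below combines a pixel-level and a semantic-level feature map with group-specific weights and measures how far fixations fall from the image center. It is not the model from the paper: the linear weighted sum, the function names (`normalize`, `combine_saliency`, `center_distance`), the toy 5×5 maps, and the weight values are all assumptions made for illustration.

```python
import numpy as np

def normalize(m):
    """Rescale a map to [0, 1]; a constant map becomes all zeros."""
    m = np.asarray(m, dtype=float)
    span = m.max() - m.min()
    return (m - m.min()) / span if span > 0 else np.zeros_like(m)

def combine_saliency(feature_maps, weights):
    """Weighted sum of normalized feature maps.

    The linear combination is an assumption of this sketch,
    not a description of the published model.
    """
    total = sum(weights[name] * normalize(fm) for name, fm in feature_maps.items())
    return normalize(total)

def center_distance(fixations, image_shape):
    """Mean Euclidean distance (in pixels) of (row, col) fixations from the image center."""
    center = (np.array(image_shape[:2]) - 1) / 2.0
    pts = np.asarray(fixations, dtype=float)
    return float(np.mean(np.linalg.norm(pts - center, axis=1)))

# Toy 5x5 feature maps (hypothetical data): a high-contrast corner and a face at the center.
pixel_map = np.zeros((5, 5))
pixel_map[0, 0] = 1.0
semantic_map = np.zeros((5, 5))
semantic_map[2, 2] = 1.0
maps = {"pixel": pixel_map, "semantic": semantic_map}

# Hypothetical weights: one profile favors semantic content, the other pixel-level contrast.
semantic_heavy = combine_saliency(maps, {"pixel": 0.2, "semantic": 0.8})
pixel_heavy = combine_saliency(maps, {"pixel": 0.8, "semantic": 0.2})

peak_semantic = tuple(int(i) for i in np.unravel_index(np.argmax(semantic_heavy), semantic_heavy.shape))
peak_pixel = tuple(int(i) for i in np.unravel_index(np.argmax(pixel_heavy), pixel_heavy.shape))
```

With these toy inputs, the semantic-heavy profile peaks at the face location and the pixel-heavy profile peaks at the high-contrast corner. A smaller `center_distance` for one set of fixations than for another would correspond to a stronger center bias.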

Highlights

- A novel three-layered saliency model with 5,551 annotated natural scene semantic objects

- People with ASD have a stronger image center bias regardless of object distribution

- Generally increased pixel-level saliency but decreased semantic-level saliency in ASD

- Reduced saliency for faces and locations indicated by social gaze in ASD

Resources

Paper

Shuo Wang*, Ming Jiang*, Xavier Morin Duchesne, Elizabeth A. Laugeson, Daniel P. Kennedy, Ralph Adolphs, and Qi Zhao, "Atypical Visual Saliency in Autism Spectrum Disorder Quantified through Model-based Eye Tracking," Neuron, Volume 88, Issue 3, Pages 604-616, November 2015.

[pdf] [bib]

(*Equal authorship)

Preview

Laurent Itti, "New Eye-Tracking Techniques May Revolutionize Mental Health Screening," Neuron, Volume 88, Issue 3, Pages 442-444, November 2015. [pdf]

Related Work

Juan Xu, Ming Jiang, Shuo Wang, Mohan Kankanhalli, and Qi Zhao, "Predicting Human Gaze Beyond Pixels," Journal of Vision, Volume 14, Issue 1, Article 28, Pages 1-20, January 2014. [pdf] [bib] [project]

Media

- Fascinating images reveal how people with autism see the world. Business Insider, 29 October 2015.

- These Photos Show How The World Might Look To A Person With Autism. Huffington Post, 24 October 2015.

- Probing the mysterious perceptual world of autism. Medical Xpress, 23 October 2015.

- How people with autism see the world: Gaze of those with the condition bypasses faces to see details such as colour and contrast. Daily Mail, 23 October 2015.

- Gaze differs in autism, and not just for faces. Futurity, 22 October 2015.

- Probing the Mysterious Perceptual World of Autism. Caltech, 22 October 2015.