Abstract

This work presents a novel method for the quantitative and objective diagnosis of Autism Spectrum Disorder (ASD) using eye tracking and deep neural networks. ASD is prevalent, affecting 1.5% of people in the US, and the lack of clinical resources for early diagnosis has been a long-standing issue. This work differentiates itself with three unique features. First, the proposed approach is data-driven and free of assumptions, which is important for new discoveries in understanding ASD as well as other neurodevelopmental disorders. Second, we concentrate our analyses on the differences in eye movement patterns between healthy people and those with ASD. An image selection method based on Fisher scores allows feature learning with the most discriminative contents, leading to efficient and accurate diagnoses. Third, we leverage recent advances in deep neural networks for both prediction and visualization. Experimental results show the superior performance of our method in terms of multiple evaluation metrics used in diagnostic tests.

Resources

Ming Jiang and Qi Zhao, "Learning Visual Attention to Identify People with Autism Spectrum Disorder," International Conference on Computer Vision (ICCV), October 2017 [pdf] [bib] [poster]

People with Autism Spectrum Disorder Have Atypical Attention

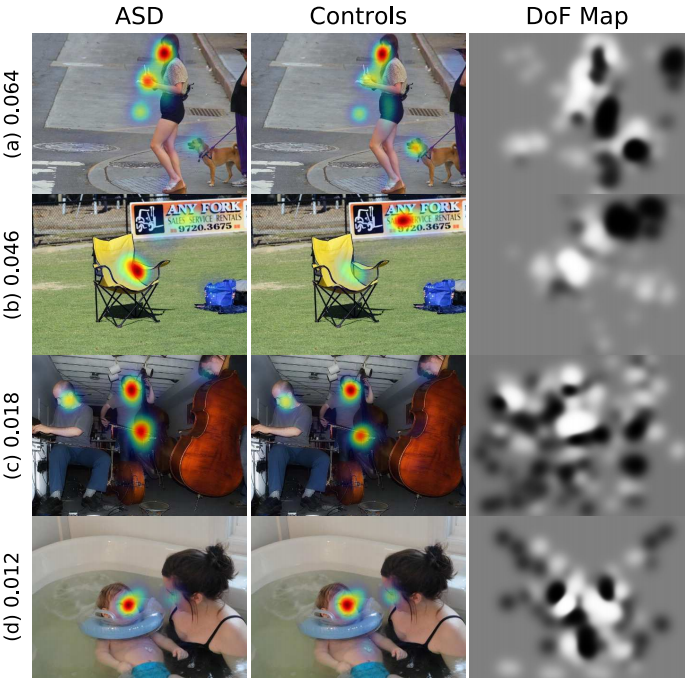

People with ASD have deficits in oculomotor control and visual attention. When viewing an image, they are less interested in social content, avoiding eye contact and social communication. Instead, they are attracted by objects, colors, shapes, and patterns.

Image features attracting attention of people with ASD.

Image features attracting attention of age-, sex-, and IQ-matched controls.

- It is generalizable to other neurodevelopmental disorders.

- It helps reduce the amount of data and time required in human experiments.

- It enriches scientific understanding of the atypical attention in ASD.

Example images and their Fisher scores.

Image Selection Based on Gaze Features

By using natural-scene stimuli and DNNs, the diagnostic process is completely data-driven. To reduce the data collection cost at test time, we first propose to select the most discriminative images based on the Fisher scores of gaze features. A difference of fixations (DoF) map is computed to indicate the difference of eye fixations between subjects with ASD and controls.
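The two quantities mentioned above can be illustrated with a minimal sketch. It assumes the standard two-class Fisher score (between-group variance over within-group variance) for a single scalar gaze feature, and it takes the DoF map to be a plain difference of Gaussian-smoothed, normalized fixation density maps. The function names, the smoothing width `sigma`, and the absence of any further rescaling are illustrative assumptions, not details confirmed by this page.

```python
import numpy as np


def fisher_score(asd_values, ctrl_values):
    """Two-class Fisher score of one scalar gaze feature measured on one image."""
    asd = np.asarray(asd_values, dtype=float)
    ctrl = np.asarray(ctrl_values, dtype=float)
    overall_mean = np.concatenate([asd, ctrl]).mean()
    # Between-group scatter: how far each group mean lies from the overall mean.
    between = (asd.size * (asd.mean() - overall_mean) ** 2
               + ctrl.size * (ctrl.mean() - overall_mean) ** 2)
    # Within-group scatter: spread of each group around its own mean.
    within = asd.size * asd.var() + ctrl.size * ctrl.var()
    return between / (within + 1e-12)  # small constant avoids division by zero


def select_images(scores, k):
    """Indices of the k images with the highest Fisher scores."""
    return np.argsort(np.asarray(scores))[::-1][:k]


def fixation_map(fixations, shape, sigma=2.0):
    """Gaussian-smoothed fixation density, normalized to sum to 1.

    Assumes both image dimensions are larger than the blur kernel.
    """
    density = np.zeros(shape, dtype=float)
    for row, col in fixations:
        density[row, col] += 1.0
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2 * sigma ** 2))
    kernel /= kernel.sum()

    def blur(v):
        return np.convolve(v, kernel, mode="same")

    # Separable blur: filter along columns, then along rows.
    density = np.apply_along_axis(blur, 0, density)
    density = np.apply_along_axis(blur, 1, density)
    total = density.sum()
    return density / total if total > 0 else density


def dof_map(asd_fixations, ctrl_fixations, shape, sigma=2.0):
    """Positive where the ASD group fixates more, negative where controls do."""
    return (fixation_map(asd_fixations, shape, sigma)
            - fixation_map(ctrl_fixations, shape, sigma))
```

In this sketch, images on which the two groups' gaze features are well separated receive high scores and would be kept by `select_images`, while images on which both groups look alike score near zero.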

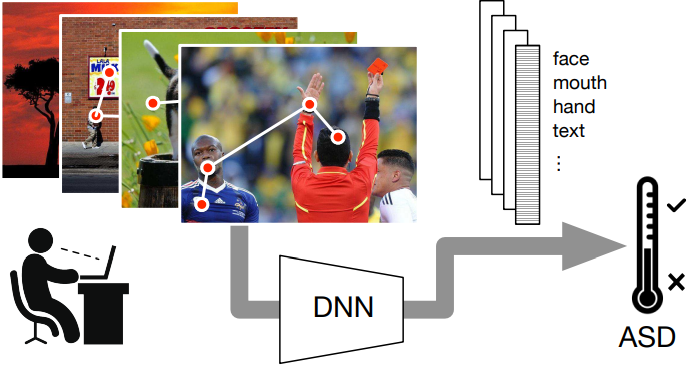

Characterize ASD with Deep Learning and Eye Tracking

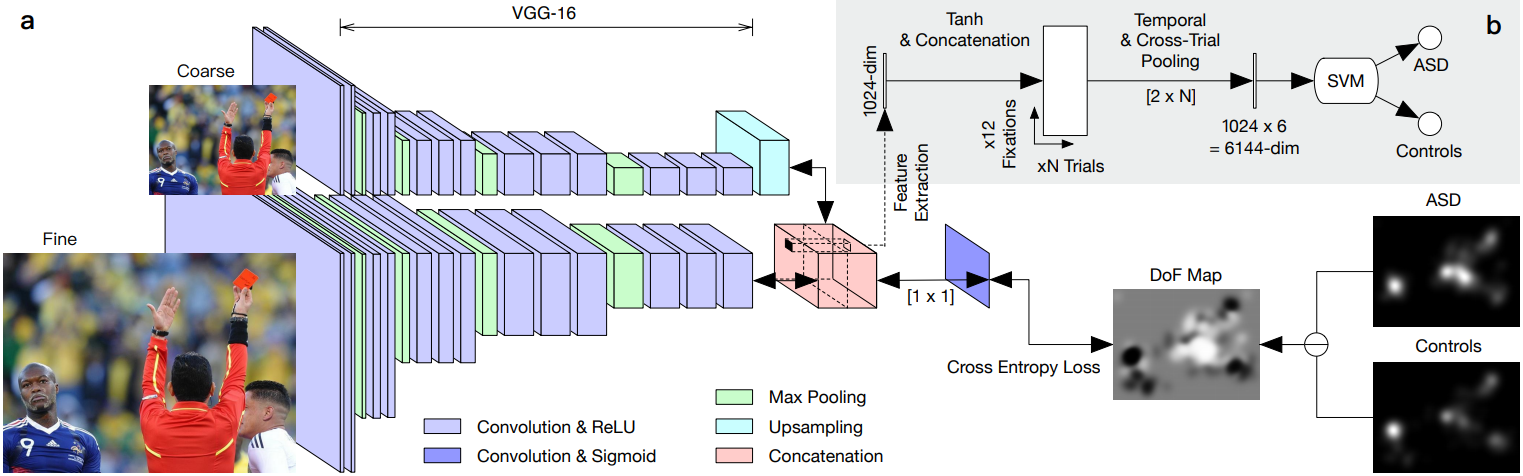

The proposed deep learning approach consists of two steps:

- (a) Discriminative image features are learned end-to-end to predict the difference of fixations (DoF) maps.

- (b) Features at fixated pixels are extracted and integrated across trials, and then classified with an SVM.
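Step (b) can be sketched as follows, under stated assumptions. The feature maps here are random arrays standing in for the output of the network learned in step (a). Averaging over fixations and then over trials is one plausible way to integrate across trials, and the linear-kernel `SVC` from scikit-learn is an illustrative choice; none of these details, nor the helper names and synthetic data, are specified by this page.

```python
import numpy as np
from sklearn.svm import SVC


def features_at_fixations(feature_map, fixations):
    """Mean feature vector over fixated pixels.

    feature_map: array of shape (H, W, C); fixations: list of (row, col).
    """
    rows, cols = zip(*fixations)
    return feature_map[list(rows), list(cols), :].mean(axis=0)


def subject_descriptor(trials):
    """Integrate across trials by averaging the per-trial feature vectors.

    trials: iterable of (feature_map, fixations) pairs, one per viewed image.
    """
    return np.mean([features_at_fixations(fm, fx) for fm, fx in trials], axis=0)


def make_synthetic_subject(rng, is_asd, n_trials=5, shape=(16, 16, 4)):
    """Hypothetical data: channel 0 is high on the left half of every image,
    and the two groups fixate on opposite halves."""
    trials = []
    for _ in range(n_trials):
        fmap = rng.normal(size=shape)
        fmap[:, :8, 0] += 3.0
        lo, hi = (0, 8) if is_asd else (8, 16)
        fixations = [(int(rng.integers(0, 16)), int(rng.integers(lo, hi)))
                     for _ in range(6)]
        trials.append((fmap, fixations))
    return trials


rng = np.random.default_rng(0)
labels = np.array([1, 0] * 10)  # 1 = ASD, 0 = control (synthetic)
X = np.stack([subject_descriptor(make_synthetic_subject(rng, bool(y)))
              for y in labels])

# Train on the first 14 synthetic subjects, evaluate on the remaining 6.
clf = SVC(kernel="linear").fit(X[:14], labels[:14])
accuracy = clf.score(X[14:], labels[14:])
```

Each subject is reduced to a single fixed-length vector regardless of how many images they viewed or how many fixations they made, which is what lets a standard classifier such as an SVM operate on the result.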

Experimental Results

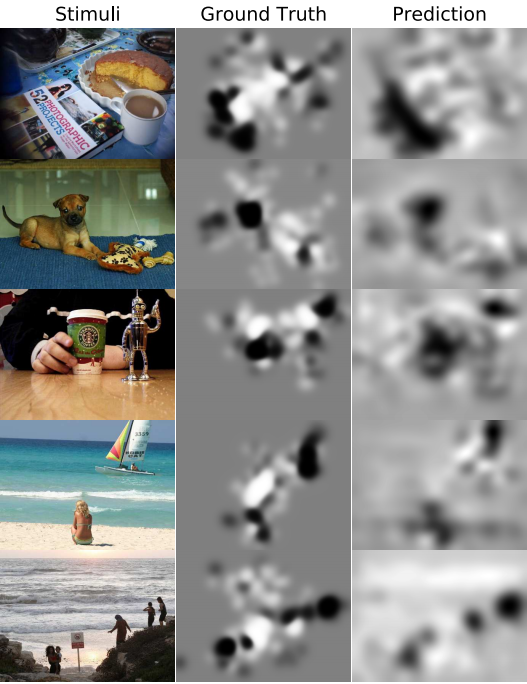

Predicting where ASD and control groups look in novel images.

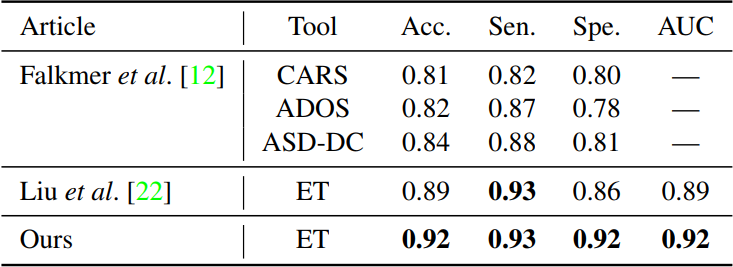

Quantitative comparison with current diagnostic tools and the most related eye-tracking (ET) study.

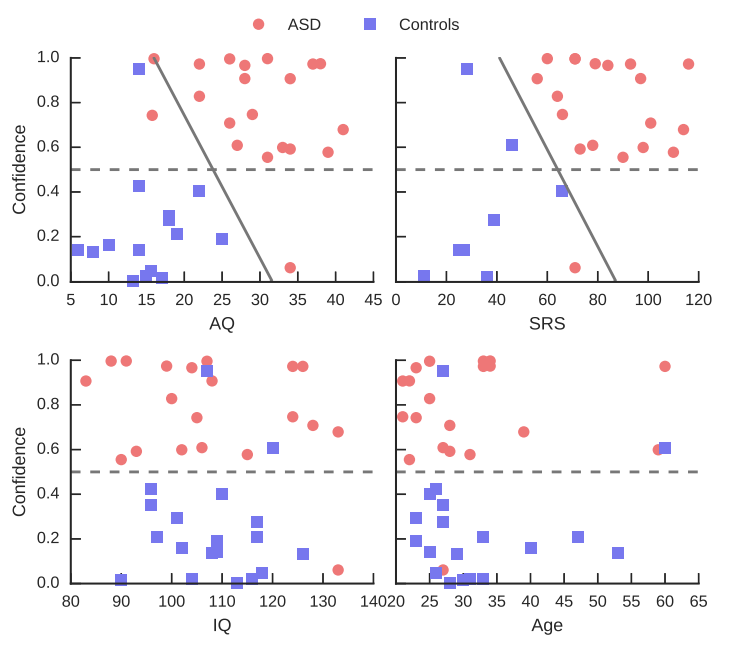

Classification confidence correlates strongly with the subjects' AQ and SRS scores but not IQ or age. Dashed lines indicate the classification boundary at 0.5. Solid lines indicate the optimal classification boundaries integrating our model prediction and the quotient-based evaluation.

Related Work

Shuo Wang*, Ming Jiang*, Xavier Morin Duchesne, Elizabeth A. Laugeson, Daniel P. Kennedy, Ralph Adolphs, and Qi Zhao, "Atypical Visual Saliency in Autism Spectrum Disorder Quantified through Model-based Eye Tracking," Neuron, Volume 88, Issue 3, Pages 604-616, November 2015. [pdf] [bib] [project] (*Equal authorship)

Juan Xu, Ming Jiang, Shuo Wang, Mohan Kankanhalli, and Qi Zhao, "Predicting Human Gaze Beyond Pixels," Journal of Vision, Volume 14, Issue 1, Article 28, Pages 1-20, January 2014. [pdf] [bib] [project]