Spring 2018 CSCI 5980

Multiview 3D Geometry in Computer Vision

Mon/Wed 4:00pm-5:15pm @ Ford Hall B15

Description

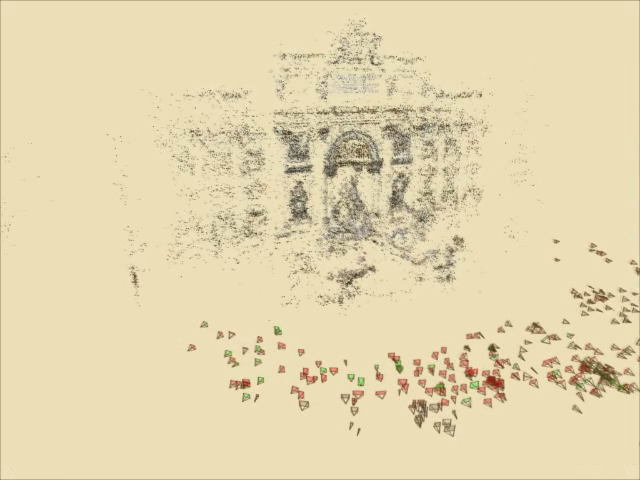

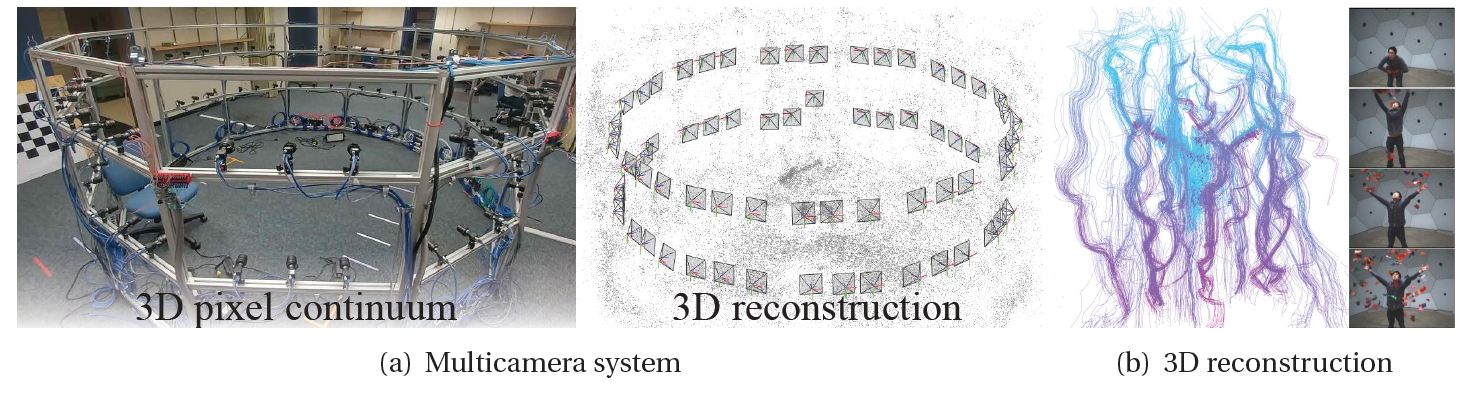

Multiple cameras continually capture our daily events, including social and physical interactions, in the form of first-person cameras (e.g., Google Glass), cellphone cameras, and surveillance cameras. Multiview geometry is a core branch of computer vision that studies the 3D spatial relationship between cameras and scenes. This technology is used to localize robots and plan their motion, to reconstruct a city (e.g., Rome) from internet photos, and to understand human behavior using body-worn cameras. In this course, we will focus on 1) fundamentals of projective camera geometry; 2) implementation of a 3D reconstruction algorithm; and 3) research paper review. The desired outcome of the course is for each student to have their own implementation of a 3D reconstruction algorithm called "structure from motion". The course will cover the core mathematics of multiview camera geometry, including perspective projection, epipolar geometry, point triangulation, camera resectioning, and bundle adjustment. In parallel, these geometric concepts will be implemented so that they can be applied directly to domain-specific research such as robot localization. The course includes a final term project that uses the multi-camera system to build a creative system in an area such as robotics, AR/VR, vision, or graphics.
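As a small illustration of the first concept listed above, perspective projection, the standard pinhole model maps a 3D point X to a pixel via x ~ K (R X + t). The sketch below is a minimal example under that model; the function name `project_point` and all numeric values (intrinsics, pose, and point) are hypothetical and are not taken from the course materials. It is written in plain Python for brevity, although the course assignments use MATLAB.

```python
def project_point(K, R, t, X):
    """Project a 3D world point X to pixel coordinates using x ~ K (R X + t)."""
    # World coordinates -> camera coordinates: X_cam = R X + t
    X_cam = [sum(R[i][j] * X[j] for j in range(3)) + t[i] for i in range(3)]
    if X_cam[2] <= 0:
        raise ValueError("point is not in front of the camera")
    # Camera coordinates -> homogeneous image coordinates: x_h = K X_cam
    x_h = [sum(K[i][j] * X_cam[j] for j in range(3)) for i in range(3)]
    # Perspective division by the third homogeneous coordinate
    return (x_h[0] / x_h[2], x_h[1] / x_h[2])


# Hypothetical intrinsics: focal length 800 px, principal point (320, 240)
K = [[800.0, 0.0, 320.0],
     [0.0, 800.0, 240.0],
     [0.0, 0.0, 1.0]]
# Hypothetical pose: camera at the world origin, axes aligned with the world
R = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 0.0]

u, v = project_point(K, R, t, [0.5, -0.25, 2.0])
print(u, v)
```

Doubling the depth of the point moves its projection toward the principal point, which is the foreshortening effect that the later topics (epipolar geometry, triangulation) build on.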

Information

Instructor: Hyun Soo Park (hspark at umn.edu)

Syllabus: pdf

Moodle: https://ay17.moodle.umn.edu/course/view.php?id=11243

Office hours: Tue/Thu 5:00pm-6:00pm (Keller Hall 5-225E)

Prerequisite: Linear Algebra; MATLAB

Textbook: Not required, but the following book will be referred to frequently:

"Multiple View Geometry in Computer Vision", R. Hartley and A. Zisserman

I am writing a book based on these lectures:

Chapter 1 (Camera model)

Chapter 2 (Projective line)

Chapter 3 (Image transformation)

Slide

Topic

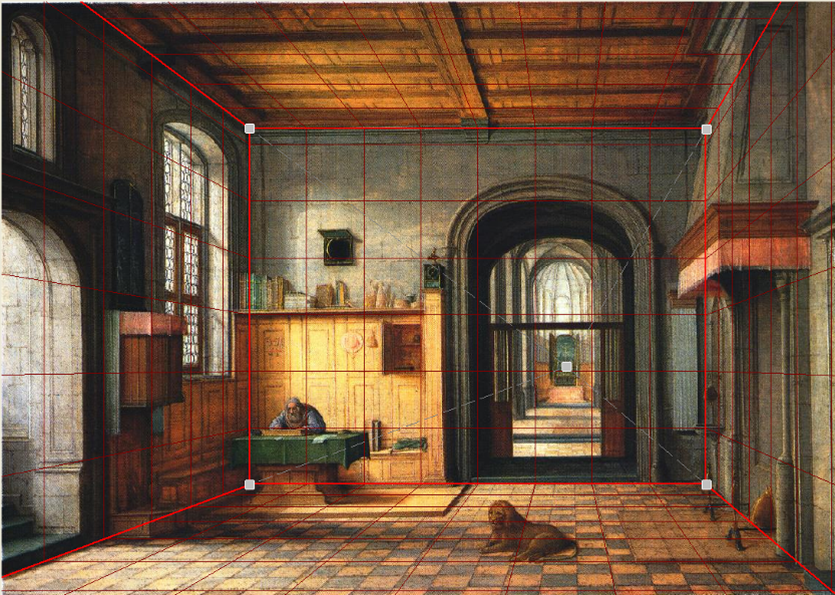

Single view

Camera model

Camera projection matrix

Projective line

Single view metrology

Image transformation

Estimation I (Linear algebra)

Camera calibration

Rotation representation

Where am I? (vanishing lines)

Where am I? (PnP)

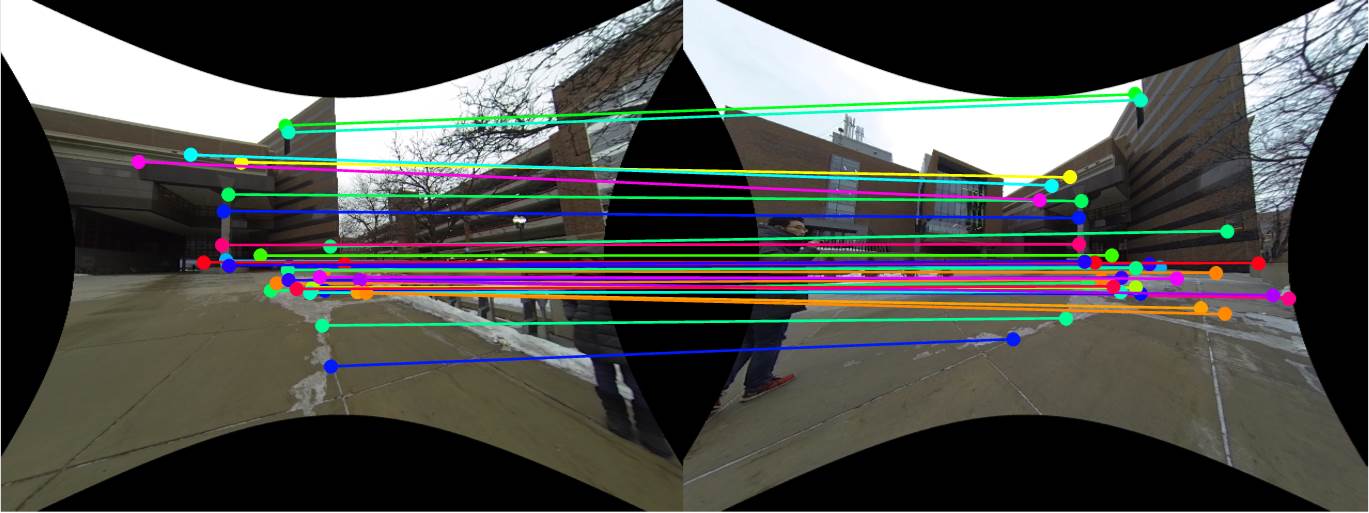

Multiview

Epipolar geometry

Fundamental matrix

Triangulation and stereo

Feature and matching

Estimation II (robust modeling)

Estimation III (nonlinear optimization)

Bundle adjustment I (geometric error)

Bundle adjustment II (sparse structure and analytic Jacobian)

Bundle adjustment III (implementation)

MATLAB code

Example code can be found here.

Grading policy

50%: 5 programming assignments (10% each)

20%: exam

5%: project proposal

10%: project related work

15%: project final

Late submission: 20% off for each late day
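As a rough sketch of how the grading weights above combine, the snippet below computes a weighted course total and applies the late penalty. It assumes one possible reading of the late policy, namely that 20% of the earned score is deducted per late day and the result cannot drop below zero; that reading, and the names `WEIGHTS`, `late_adjusted`, and `course_total`, are illustrative assumptions rather than the official rule.

```python
# Weights copied from the grading policy; together they add up to 100%.
WEIGHTS = {
    "assignments": 0.50,           # 5 programming assignments, 10% each
    "exam": 0.20,
    "project_proposal": 0.05,
    "project_related_work": 0.10,
    "project_final": 0.15,
}


def late_adjusted(score, days_late, penalty_per_day=0.20):
    """Assumed reading: deduct 20% of the earned score per late day, never below 0."""
    return score * max(0.0, 1.0 - penalty_per_day * days_late)


def course_total(scores):
    """Weighted total on a 0-100 scale; `scores` maps each component to 0-100."""
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)


# Hypothetical student: a 90-point assignment average, submitted on time
scores = {
    "assignments": late_adjusted(90.0, days_late=0),
    "exam": 80.0,
    "project_proposal": 100.0,
    "project_related_work": 85.0,
    "project_final": 90.0,
}
print(course_total(scores))
```

Under this reading, a submission that is five or more days late earns no credit.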

Important dates

Guest lecture (Apr 18): Jaesik Park (Intel Labs)

Project

A team of students will write and present a computer vision conference paper over the course of this class. Each team can include up to two students, and the subject is open to diverse applications, e.g., vision, graphics, robotics, AR/VR, and deep learning, but must be related to 3D geometry. The multi-camera system at Shepherd Laboratory can be used for your data capture.

Most technical papers are composed of the following sections: introduction, related work, method, data, results, and discussion (check out state-of-the-art vision papers).

+ The first milestone is team formation and discussion of potential ideas with the instructor by Feb 16.

+ Once approved, the project can be proposed on Mar 7 (Week 8). The project proposal includes the introduction write-up (1-1.5 pages) and data capture.

+ In Week 12, the related work will be presented and submitted (1-1.5 pages).

+ In Week 15, the final work will be presented and a full paper will be submitted (6-8 pages).

+ Project submission format (CVPR) can be found here

Homework

Scholastic misconduct

Scholastic misconduct is broadly defined as "any act that violates the right of another student in academic work or that involves misrepresentation of your own work. Scholastic dishonesty includes, (but is not necessarily limited to): cheating on assignments or examinations; plagiarizing, which means misrepresenting as your own work any part of work done by another; submitting the same paper, or substantially similar papers, to meet the requirements of more than one course without the approval and consent of all instructors concerned; depriving another student of necessary course materials; or interfering with another student's work."